
Abstract¶

This tutorial is meant as an introduction to programming 3D computer graphics. You will learn how to program a 3D rasterizer (one of many different approaches to implementing 3D computer graphics): this isn't about programming a game or using the latest HTML5 features. It is, however, meant as a way to introduce you to how 3D computer graphics work "under the hood". Essentially this tutorial walks you through what OpenGL and DirectX have implemented in their respective code-base (and drivers), without system or library specific overhead.

Introduction¶

About & Goals¶

Welcome - you are about to embark on a fascinating journey through the world of 3D computer graphics for programmers! What are 3D graphics and why should I, as a programmer, care? 3D graphics are graphics in the third dimension / space, usually rendered onto a 2D (flat) surface. This is essentially the process of taking a 3D world from a video game and displaying it to a user's screen. Sounds trivial? It isn't, but don't be deterred - having the knowledge of how this is done will make you a top-notch computer graphics programmer. 3D computer graphics have many applications well outside of video games: medical imagery, robotics research, data visualization, etc.

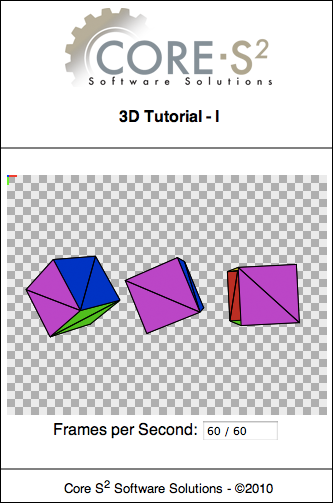

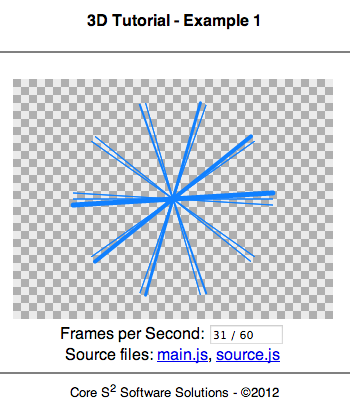

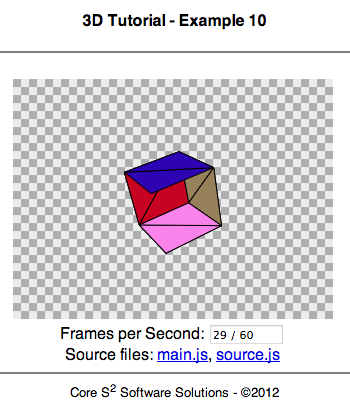

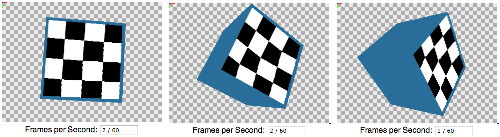

A preview of what's to come: though many lessons will render only simple geometry, everything learned can easily be applied to complex scenes and geometry.

"What's this about OpenGL, DirectX, and game engines I keep hearing about?" Great question! Those are actually two separate categories: computer graphic libraries take given geometry, images, lighting information, etc. and generate a 2D image output. What we are covering in this article is how the internals of those libraries work: how exactly does a computer calculate where and how to draw a cube, or other arbitrary graphics data? The second category would be game engines, which are generally a set of other libraries (user input, audio, texture loading, etc.) making your rendering engine more interactive to the user.

This tutorial will walk you through how 3D rendering works at its core: we purposely avoid using any existing code or libraries to keep all rendering steps visible to you. You should be warned at this point that this tutorial, unlike what you will commonly find on the web, is not to teach you how to use 3D engines or libraries. Instead, if you want to write your own rendering system and understand how OpenGL and DirectX work internally, this tutorial is perfect for you!

Before going into the actual tutorial, there are a few things you should ask yourself and understand. A big question is why use 3D in the first place? Simple: when you draw an image of a car on paper, it's only an image of one view facing the car. If you build a 3D model of a car, you can move and rotate your view in any number of ways and render an almost infinite number of images from it. Instead of having an artist make one texture (the car image), they can make a 3D model that can be presented to the user from any position and angle. There are also many other benefits, such as making animated films more realistic, adding depth to gameplay mechanics, and making interactive media much more life-like.

In this tutorial, we will be creating a software 3D renderer in JavaScript using the HTML5 Canvas tag. What this means is that we will be creating a 3D rendering system, written in JavaScript, that does not use any hardware acceleration (i.e. the use of any hardware features outside of standard JavaScript), and the output is placed into the HTML5 Canvas tag (a dynamic 2D rendering surface). This software renderer will be a "fixed-pipeline", where we do not dynamically redefine how projection transformation and per-pixel operators work at run-time. The feature that allows us to do this is called Shaders, and is well beyond the scope of this article, though an important topic to know when using hardware-accelerated graphics libraries.

This tutorial will not use WebGL, the modern standard for browser-based 3D graphics, because the goal here is to teach how 3D rasterization works, not how to use an existing library. JavaScript was chosen since most users have a web browser capable of quickly executing JavaScript. JavaScript also lowers the barrier to entry for this tutorial, since it isn't system-specific and does not require special software that doesn't already exist on almost every computer. Note that this tutorial will still require a modern browser, such as Chrome, Firefox, Opera, IE 9+, etc., because of the need to support the HTML5 Canvas tag.

You should know the basics of JavaScript (ECMAScript) or some other C-like programming language (C++, C#, Java, Objective-C, PHP, etc.), since this article takes an imperative programming approach to JavaScript. If you need help getting started with JavaScript, check out Mozilla's great JavaScript guide or the lighter Mozilla Re-Introduction to JavaScript. W3Schools' tutorials are also great casual introductions to JavaScript. It is also highly recommended that you are comfortable with the basics of trigonometry. Knowledge of linear algebra (matrix manipulation) will be even more helpful.

Computer Graphics¶

Computer graphics is the process of rendering graphics through computers. It's as simple as that, but the field includes many interesting topics and subjects well outside of just "video game graphics". Texture synthesis (creating a new texture from scratch), world generation (creating a world through algorithms), face detection (finding people's faces in a photo) are all within the domain of computer graphics.

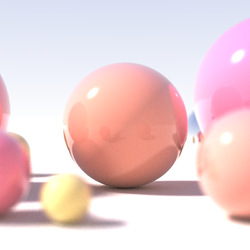

Computer graphics can be roughly split (this is a big trivialization) into two categories: real-time and realistic computer graphics. Real-time simply means that the application can be manipulated and changed without any noticeable latency or slow response, hence the term "real-time". The advantage is that your scenes render quickly and can be interactive, though the tradeoff is that you lose realism because of optimizations. Classic examples of this are most 3D rasterized video games: Doom, Quake, etc. On the other hand, realistic computer graphics are very detailed and can be life-like, internally simulating the way optics work down to the per-pixel level. Rendering these scenes takes many hours of intensive computation, but produces very clean and sharp images. Another way of remembering the difference is that real-time graphics tend to work by drawing polygons (generally triangles) on-screen, which can be done very quickly. Realistic graphics go a step beyond that, drawing the scene pixel by pixel.

It is important to note that there are many different approaches to real-time 3D computer graphics, with each method having its respective strengths and weaknesses. Scanline rendering does a top-to-bottom image row check for possible collisions amongst scene objects. Though good for accurate depth-detection, the algorithm is slow because it requires iterating through all scene models (though there are ways of speeding this up). A similar method, called ray-casting, used in Wolfenstein 3-D, renders scenes in a similar way, but has a subtle trick for fast run-time performance: instead of checking per-pixel, the camera only checks pixels along the image's middle horizon. If a collision is observed at a point on that horizon, then the vertical scale of the wall is changed with respect to that distance. In essence, it's a way to very quickly draw uniform walls, but it fails at dealing with complex geometry. Voxels, recently made famous by Minecraft (though interestingly, Minecraft's output is through a polygon rasterizer using OpenGL), are fast for data reading and writing, but give a blocky appearance. There are tricks to change the output to a smoother surface, but that tends to be computationally intensive because of the cubic growth of data based on scene size. This list is by no means complete, but gives a general overview of common methods, with the one we are talking about to be described below:

Polygon rasterization is the most common approach for real-time computer graphics. Though not remarkably modern, it has one subtle advantage that has made it the king of real-time graphics: it can be massively parallelized. Rasterization is the process of taking a simple geometric primitive (the simplest being a triangle, more on that later), and mapping it from 3D space to 2D space. This also includes scaling, rotation, and other changes on the points, but the idea is that for each point that makes up a triangle, where a group of triangles form a mesh, all points are stateless: drawing one point doesn't affect how the others are drawn. Thus, we can compute this data in parallel, and that is exactly what graphics cards do. The reason why we have hardware acceleration is that CPUs are great at doing one thing at a time per core, yet graphics cards, though "slower" in terms of single-operation computational speed, have massively higher throughput because they can compute hundreds of thousands of points in parallel.
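To make the "stateless" idea concrete, here is a minimal sketch. It is not the projection we build later in this tutorial: "ProjectPoint" is a hypothetical helper that uses a plain perspective divide, and "FocalLength" is a made-up scale factor. The only thing to notice is that each point of the triangle is mapped on its own, without reading or changing any shared state, which is why the work could be split across many processors.

```javascript
// Hypothetical helper: maps one 3D point to 2D with a simple perspective divide.
// "FocalLength" is an assumed scale factor; Point.z must not be zero.
function ProjectPoint(Point, FocalLength)
{
    return {
        x: Point.x * FocalLength / Point.z,
        y: Point.y * FocalLength / Point.z
    };
}

// A triangle is three points; each one is projected independently of the others
function ProjectTriangle(Triangle, FocalLength)
{
    return Triangle.map(function(Point) {
        return ProjectPoint(Point, FocalLength);
    });
}

var Triangle = [
    {x: 1, y: 2, z: 2},
    {x: -1, y: 0, z: 4},
    {x: 0, y: 1, z: 1}
];

var Projected = ProjectTriangle(Triangle, 100);
console.log(Projected);
```

Because "ProjectPoint" depends only on its own arguments, the three calls could run in any order, or at the same time, and produce the same result.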

An example, from the rasterization-based Irrlicht engine, on the left, and an example ray-tracer with depth-of-field enabled, on the right.

Our approach will be to program a rasterizer, taking triangles (forming arbitrary models) in 3D world-space, and mapping them out to 2D screen-space. This is called a "software renderer" because we aren't using hardware acceleration that is provided by OpenGL and DirectX. Instead, we are going to implement all of these low-level steps in our own code as an academic exercise, and have it be executed one step at a time like what a single-threaded processor does.

You may be wondering why we use dedicated hardware just to rasterize triangles rather than to implement ray-tracing, since this special hardware is all about mass-parallelization. Ray-tracing is much more complex than casting a single ray for each pixel: ray collisions need to look around for light sources, compute the reflection and refraction of materials, and detect shadows. Simply put, ray-tracing is far more computationally intensive, while rasterization is still considered a good balance between computational complexity and visual results. What is interesting is that every day we are getting closer to producing cheap hardware that is powerful enough to do ray-tracing in real-time.

Regardless of the method chosen to render a scene, the output is generally a single 2D image. Our human eyes are very interesting in that if a set of images is shown to us in sequence, at fast enough speeds, we cannot distinguish between individual images. This turns out to be a good thing, as our brains stop interpreting those individual images and start interpreting them as small changes, as an "animation": this is where the term movie ("moving images") comes from. In computer graphics, to produce animations in real-time, we render individual images and show them very quickly to the user, usually between 24 and 60 frames a second depending on several desired output properties. The higher the frame rate, the smoother the animations look, but the more work the computer has to do. 24 frames a second is considered a general "minimum speed" that will appear "smooth enough" to the average observer to look like a video. This article will focus on real-time animations: we will render to a single image, present that image, then render the next image. We will design our code to do this 60 times a second, giving us a 60 FPS target, and create a true real-time 3D rasterizer.
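To put those frame rates in perspective, it helps to turn them into a time budget per frame. The short sketch below does that arithmetic; "FrameBudgetMs" is a hypothetical helper name used only for this illustration.

```javascript
// Time available to render one frame, in milliseconds, for a given frame rate
function FrameBudgetMs(FramesPerSecond)
{
    return 1000 / FramesPerSecond;
}

console.log(FrameBudgetMs(24)); // roughly 41.7 ms per frame
console.log(FrameBudgetMs(60)); // roughly 16.7 ms per frame
```

At our 60 FPS target, all of the drawing for one image has to finish in under about 17 milliseconds.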

Development Tools & Conventions¶

Before getting into the actual code itself, we have to choose what language to program in, and how to draw the basics. For the sake of simplicity, we will choose JavaScript and the HTML 5 Canvas element. JavaScript is commonly used for developing interactive websites, but it is also a powerful scripting language. Though it is not known for its speed, it does have other benefits such as dynamic typing and a C-like syntax. Canvas, the HTML element we will render onto, is perfect for this tutorial: most browsers support it, and it doesn’t require any complex resource management unlike creating a native Windows or Linux graphical application. Another reason why we are choosing to use this Canvas and JavaScript combination is that it doesn’t require anything for you to install. This project should be ready to work on without any overhead!

Setting up your development environment is easy since you probably already have everything you need. To program along with this tutorial, you just need a web-browser and a text editor. All you need to do, from this point on, is open up a text editor and start writing down your source code as we walk through this tutorial, save the file as some sort of web page (usually ends with *.html or *.htm) and open it with your browser of choice. It might be more convenient, especially if you need to debug code, to install a true JavaScript IDE (Integrated Development Environment). I'm leaving it up to you to decide what is best for your needs.

Our goal is to keep this tutorial as simple and easy to understand as possible. Because of this, much of the code I will be writing will not be optimal, so that it is easy to read for even novice programmers. I will also write as many comments in the code as possible to make every step that isn't intuitive more clear.

Our coding style will be block-based and use camel notation, where local variables are capitalized, member variables are also capitalized, and globals are all caps. This is simply our style, and does not reflect any sort of design choice other than to try and keep things as simple as possible. I do include more boilerplate code than usual, but this is to keep the code as understandable as possible.

Throughout this document, there will be many links and comments to other articles on the web. To separate these notes from the main article, I've implemented a "notes" box, which looks like the following:

Note

This is a note!

Keep an eye on these, and make sure to read them while going through the article, but don't worry about completely understanding the content, as they are merely notes and not critical to understanding the main material.

Throughout the article, there will be checkpoints where you can download the source as a whole. Those points are marked with a download icon & link:

checkpoint

Naturally, this article will have to make many references to equations, but representing them in HTML is challenging since there are no helpful tools outside of generating images (which in some cases, is required). Instead, I've written most equations as cleanly as possible in mono-space font with an explanation of the equation as a whole and what each variable stands for.

(a + b)^2 = a^2 + 2ab + b^2

A simple power-of-two expansion over the sum of variables "a" and "b".

Any inline source code will be marked-up visually using the SHJS (Syntax Highlighting in JavaScript) tool, as seen below:

// This function multiplies n by 2, adds one, and returns the result

function ExampleCode(n)

{

n *= 2;

return n + 1;

}

Finally, all internal and external links include a link icon that looks like this: http://www.cores2.com. This is to help you find resources here and external references outside the scope of this document.

Development Platform¶

HTML Rendering Page¶

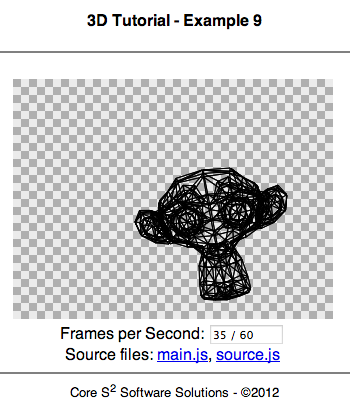

To begin with, we need a simple HTML page that contains an HTML5 Canvas element and a text field to update the FPS (frames per second) counter. Create an empty text document, and copy-paste the following:

Download the "index.htm" source code here.

<html>
  <!-- Standard head source -->
  <head>
    <style type="text/css"> body { font-family: Helvetica; } </style>
    <title>3D Tutorial - Examples</title>
    <script language="JavaScript" type="application/JavaScript" src="main.js"></script>
    <script language="JavaScript" type="application/JavaScript" src="source.js"></script>
  </head>
  <!-- When the body loads, let us call the main application entry point -->
  <body onload="Main()">
    <!-- Center content -->
    <div style="text-align: center;">
      <!-- Title -->
      <img src="CoreS2Logo.png" />
      <h4>3D Tutorial - Examples</h4>
      <hr noshade/>
      <br/>
      <!-- Canvas Content -->
      <a href="source.js">
        <canvas id="SampleCanvas" width="320" height="240">
          Sorry, but it looks like your browser does not support the Canvas tag.
        </canvas>
      </a>
      <br/>
      <!-- FPS Counter-->
      Frames per Second: <input type="text" name="FPSTextBox" id="FPSTextBox" readonly="readonly" size=10 value="Calculating..." />
      <!-- Footer -->
      <br/>
      <br/>
      <hr noshade/>
      <div style="font-size: small;">
        <a href="http://www.cores2.com" style="text-decoration: none; color: black;">Core S<sup>2</sup> Software Solutions - ©2012</a>
      </div>
    <!-- End of center -->
    </div>
  </body>
</html>

Save the file as "index.htm", or whatever name you prefer as long as it is an HTML document, then load it in a browser. If you see the message "Sorry, but it looks like your browser does not support the Canvas tag.", then your browser does not support the HTML5 Canvas tag, so refer to the introduction section to learn more about what you should install. Otherwise, you should just see a blank space where the empty Canvas element is.

The code above is pretty simple, with only a handful of important things to take a look at. You'll notice first that we load two scripts:

<script language="JavaScript" type="application/JavaScript" src="main.js"></script>
<script language="JavaScript" type="application/JavaScript" src="source.js"></script>

"main.js" is a general wrapper, that will be introduced in the next section, that creates a simple framework for us to draw in. It gives us simplified line-drawing functions, as well as the FPS counter system that gets presented as text into the text field defined later in the document.

The second source file, "source.js", is where all the magic you make will happen! This is the only text file you will be working in, and this entire tutorial revolves around it. If you want, save different versions of the file to keep track of your code changes; all you have to do is change the filename in the above lines to keep your HTML renderer page updated.

<body onload="Main()">

The above code defines what function to execute (in this case "Main()"), from the previously mentioned source files. Again, the behavior of that function will be defined in the following section, but this is really our true program entry point, where some simple work is done to keep the programming environment easy for you.

<canvas id="SampleCanvas" width="320" height="240">

Frames per Second: <input type="text" name="FPSTextBox" id="FPSTextBox" readonly="readonly" size=10 value="Calculating..." />

The Canvas element, with the id "SampleCanvas", is our target drawing surface. Everything we do will output strictly to this surface. Though you can change its size, it's best to leave the width and height alone to keep your code and this tutorial's code as similar as possible, which helps with debugging later on. The second element, with the id "FPSTextBox", is our frames-per-second output text field, which is updated through a simple frames-per-second counter implemented in the starting framework. Notice how it is a read-only field: it is purely an output, and we don't want to deal with any sort of user manipulation.

Canvas setup¶

As mentioned in the previous section, "main.js" will be our generic wrapper framework, which simplifies a few operations that we will commonly use in our tutorial, like drawing pixels, drawing lines, and filling in surfaces. Our second source file, "source.js", will be where all of your code will be written. You can write your code in any way you want in that source file, as long as it defines two required functions: "Init()", which is used for your own custom initialization of the scene and/or data, and "RenderScene()", which is where you are expected to do all of your own drawing! The rendering loop is pretty simple: our HTML page, on load, calls a function named "Main()", which is defined in "main.js". This does some internal initialization, which we will discuss shortly. After it completes its own work, it calls your own initialization function named "Init()". From that point on, the code will keep looping at a target speed of 60 times a second, calling your custom function "RenderScene()".

Note

Why do we "target" a frame-rate speed and not go as fast as possible? Modern Operating Systems are multi-process, meaning that there are multiple processes (applications) running at a time. Since processors typically can't run all of those user processes truly at the same time (processors typically work one step at a time), there is a sort of Time-Sharing / Multitasking manager that gives a process a little chunk of time, then switches to another process, repeating this so fast that the human user can't tell the difference between fast switching and everything working in parallel. Part of the time-sharing algorithm deals with the computational intensity of applications: the more intensive an application is, the more time is given to it (up to a certain point, so that it doesn't "freeze" the system by consuming too much time, preventing basic and important services from ever working). Thus, if we let our code execute as fast as possible, it would be considered a computationally intensive program and would burden the system more than it really needs to. Sure, we could execute our code very quickly, over and over again, but there is no benefit to the user since anything past 60 frames per second is not noticeably better to the human eye. Later on we will discuss how 60 FPS is even too high, since the human eye stops seeing still images at around 24 to 30 FPS. In our case, we limit ourselves to be nice to other processes, to allow the host Operating System time to do other work, and simply because we don't need to go as fast as possible once past a minimum frame speed.
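The throttling described in this note comes down to one subtraction and one clamp. The sketch below isolates that calculation in a hypothetical "ComputeSleepTime" helper; the render loop in "main.js" performs the same steps inline rather than through a function like this one.

```javascript
// Seconds to wait before starting the next frame, given the target time per
// frame and how long the last frame took to render (both in seconds)
function ComputeSleepTime(TargetFrameTime, TimeElapsed)
{
    var SleepTime = TargetFrameTime - TimeElapsed;

    // A slow frame leaves no time to give back, so never sleep a negative amount
    return Math.max(SleepTime, 0);
}

var Target = 1.0 / 60.0;

// Fast frame (4 ms): the rest of the frame time is handed back to the system
console.log(ComputeSleepTime(Target, 0.004));

// Slow frame (50 ms): we are already late, so the next frame starts right away
console.log(ComputeSleepTime(Target, 0.050));
```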

Since "source.js" requires you to implement at minimum two functions (the "Init()" for initialization, and "RenderScene()" for drawing work), the below code is a great place to start: it defines both functions as empty stubs, which is everything the framework needs to run (we will draw a background shortly). Copy-paste this code into a new text file, save this text file as "source.js", and make sure to save it to the same directory as your previously saved HTML document.

Download the "source.js" source code here.

function Init()

{

// Left empty on purpose...

}

function RenderScene()

{

// Left empty on purpose...

}

Now comes the simple framework of functions we will use throughout this tutorial. "main.js" is left un-minimized (i.e. intentionally verbose sans optimization), to let you read through the code as easily as possible. Though we will be talking about how some of these functions are implemented internally, I leave it up to you to step through the code if you wish to learn more, but essentially all that is being done is a simplification of the HTML5 Canvas rendering functions. What you should take away from the following code are the core drawing functions, which will make more sense after reading through the section on 2D graphics:

function RenderPoint(integer x, integer y, integer width, [integer r,g,b] color)

Draws a single point, of size "width", at the given screen position, of the given color.

function RenderLine(integer x1, integer y1, integer x2, integer y2, integer width, [integer r,g,b] color)

Draws a line, from point (x1, y1) to point (x2, y2), of the given width and color.

function RenderTriangle(integer x1, integer y1, integer x2, integer y2, integer x3, integer y3, integer width, [integer r,g,b] color)

Draws a triangle's outline, from point (x1, y1) to point (x2, y2) to point (x3, y3), of the given width and color.

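function RenderFillTriangle(integer x1, integer y1, integer x2, integer y2, integer x3, integer y3, integer width, [integer r,g,b] color)

Draws a triangle, from point (x1, y1) to point (x2, y2) to point (x3, y3), filling it with the color. The implementation in "main.js" accepts a width argument before the color, matching "RenderTriangle", but the width has no visible effect on a filled shape.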

var ContextHandle

A global variable, which is the 2D drawing context of the on-screen canvas for the current scene.

var CanvasWidth

A global variable, which is the current canvas width in pixels.

var CanvasHeight

A global variable, which is the current canvas height in pixels.
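One detail worth spelling out is the "color" argument. In "main.js" the wrappers read "color.R", "color.G", and "color.B", so a color is passed as a plain object with those three fields. The sketch below uses a hypothetical "ColorToStyle" helper, which is not part of "main.js", to mirror how the wrappers build the Canvas style string from such an object.

```javascript
// Hypothetical helper mirroring how "main.js" turns a color object into a
// Canvas style string; colors are plain objects with R, G, and B from 0 to 255
function ColorToStyle(Color)
{
    // Fall back to black when no color is given, as the wrappers do
    if (Color == undefined)
        return "rgb(0, 0, 0)";

    return "rgb(" + Color.R + "," + Color.G + "," + Color.B + ")";
}

var Red = {R: 255, G: 0, B: 0};
console.log(ColorToStyle(Red)); // rgb(255,0,0)

// With "main.js" loaded, the same object is passed straight to the wrappers:
// RenderPoint(160, 120, 4, Red);
// RenderLine(10, 10, 100, 50, 2, Red);
```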

Download the "main.js" source code here.

/***************************************************************

3D Tutorial - Core S2 Software Solutions -Copyright 2012

Learn more at www.cores2.com

This source file is developed and maintained by:

+ Jeremy Bridon jbridon@cores2.com

File:

main.js

Description:

Main application entry point; connects to the canvas HTML 5

element and starts the rendering cycle, managing performance

and throttling as needed. Also does double-buffering by creating

and swapping an internal Canvas buffer.

To interface with this, you must create another JavaScript

file that implements the "Init()" and "RenderScene()"

functions.

***************************************************************/

/*** Public Variables (Read Only) ***/

// Global timer used for animations; grows over time

// Measured as fractions of seconds

var TotalTime = 0.0;

// Target time per frame, measured in fractions of seconds (1/60 for 60 FPS)

const TargetFrameTime = 1.0 / 60.0;

// Global canvas width and heights

var CanvasWidth;

var CanvasHeight;

// Global screen centers

var CenterX;

var CenterY;

/*** Internal Functions & Variables ***/

// FPS counter, refresh rate, and internal timer

var FrameRateTime = 0; // Seconds elapsed since last FPS post

var FrameRateCount = 0; // Number of frames since last FPS post

var FrameRateRefresh = 1; // Time interval between each FPS post

// Global canvas and graphics handle

var CanvasHandle = null;

var ContextHandle = null;

// Backbuffer canvas handle

var BackCanvasHandle = null;

var BackContextHandle = null;

// Main application entry point; this MUST be called before any other functions

// Calls the user overloaded "Init(...)" function and starts

// the main render loop

function Main()

{

// Get context handles

CanvasHandle = document.getElementById("SampleCanvas");

ContextHandle = CanvasHandle.getContext("2d");

// Get the canvas size

CanvasWidth = ContextHandle.canvas.clientWidth;

CanvasHeight = ContextHandle.canvas.clientHeight;

// Get the canvas center

CenterX = CanvasWidth / 2;

CenterY = CanvasHeight / 2;

// Create an image backbuffer

BackCanvasHandle = document.createElement("canvas");

BackCanvasHandle.width = CanvasWidth;

BackCanvasHandle.height = CanvasHeight;

BackContextHandle = BackCanvasHandle.getContext("2d");

// Call the custom init function

Init();

// Start the render cycle

RenderLoop();

}

// Main render loop

// This should setup a timer at the end to call itself again

// This function throttles itself to only update at a target FPS

function RenderLoop()

{

// Start timing this render cycle

var StartTime = new Date();

// Clear backbuffer

BackContextHandle.clearRect(0, 0, CanvasWidth, CanvasHeight);

// Save context state

BackContextHandle.save();

// Render the scene

RenderScene(BackContextHandle);

// Restore the context state

BackContextHandle.restore();

// Swap the backbuffer with the frontbuffer

// We take the contents of the backbuffer and draw onto the front buffer

var ImageData = BackContextHandle.getImageData(0, 0, CanvasWidth, CanvasHeight);

ContextHandle.putImageData(ImageData, 0, 0);

// End time

var EndTime = new Date();

// Measure the difference

// Note that "value of" returns millis, we divide back into seconds

var TimeElapsed = (EndTime.valueOf() - StartTime.valueOf()) / 1000;

var SleepTime = TargetFrameTime - TimeElapsed;

// If target sleep time is negative, simply don't sleep

// This is in cases where we take longer than intended to render a scene

if(SleepTime < 0)

SleepTime = 0;

// Calculate the cycle time of how long it took to execute this frame

var CycleTime = TimeElapsed + SleepTime;

// Calculate FPS when needed

FrameRateTime += CycleTime;

if (FrameRateTime >= FrameRateRefresh)

{

// Post FPS

var FPS = FrameRateCount / FrameRateRefresh;

document.getElementById("FPSTextBox").value = FPS + " / " + (1 / TargetFrameTime);

// Reset time and frame count

FrameRateTime = 0;

FrameRateCount = 0;

}

// Grow frame count

FrameRateCount++;

// Callback to self after sleep-off time

// Note that setTimeout expects milliseconds, so we convert from seconds

TotalTime += CycleTime;

setTimeout(RenderLoop, SleepTime * 1000);

}

/*** Graphics Primitive Wrappers ***/

// Render a point given a position, a width, and a color

function RenderPoint(x, y, width, color)

{

// Shorthand context handle

var ctx = BackContextHandle;

// Save context

ctx.save();

// Set color

if(color != undefined)

ctx.fillStyle = "rgb(" + color.R + "," + color.G + "," + color.B + ")";

else

ctx.fillStyle = "rgb(0, 0, 0)";

// Draw a filled square centered on the point

ctx.fillRect(x - width/2, y - width/2, width, width);

// Revert context

ctx.restore();

// Done rendering point

}

// Render a line given two points, a width, and a color

function RenderLine(x1, y1, x2, y2, width, color)

{

// Shorthand context handle

var ctx = BackContextHandle;

// Save context

ctx.save();

// Set width and cap style

ctx.lineWidth = width;

ctx.lineCap = "butt";

ctx.lineJoin = "round";

// Set color

if(color != undefined)

ctx.strokeStyle = "rgb(" + color.R + "," + color.G + "," + color.B + ")";

else

ctx.strokeStyle = "rgb(0, 0, 0)";

// Draw from point to point

ctx.beginPath();

ctx.moveTo(x1, y1);

ctx.lineTo(x2, y2);

ctx.closePath();

ctx.stroke();

// Revert context

ctx.restore();

// Done rendering line

}

// Render a triangle given three points, a width, and a color

function RenderTriangle(x1, y1, x2, y2, x3, y3, width, color)

{

// Shorthand context handle

var ctx = BackContextHandle;

// Save context

ctx.save();

// Set width and cap style

ctx.lineWidth = width;

ctx.lineCap = "butt";

ctx.lineJoin = "round";

// Set color

if(color != undefined)

ctx.strokeStyle = "rgb(" + color.R + "," + color.G + "," + color.B + ")";

else

ctx.strokeStyle = "rgb(0, 0, 0)";

// Draw from point to point

ctx.beginPath();

ctx.moveTo(x1, y1);

ctx.lineTo(x2, y2);

ctx.lineTo(x3, y3);

ctx.closePath();

ctx.stroke();

// Revert context

ctx.restore();

// Done rendering triangle

}

// Render a filled triangle given three points and a color (width is accepted but does not affect the fill)

function RenderFillTriangle(x1, y1, x2, y2, x3, y3, width, color)

{

// Shorthand context handle

var ctx = BackContextHandle;

// Save context

ctx.save();

// Set width and cap style

ctx.lineWidth = width;

ctx.lineCap = "butt";

ctx.lineJoin = "round";

// Set color

if(color != undefined)

ctx.fillStyle = "rgb(" + color.R + "," + color.G + "," + color.B + ")";

else

ctx.fillStyle = "rgb(0, 0, 0)";

// Draw from point to point

ctx.beginPath();

ctx.moveTo(x1, y1);

ctx.lineTo(x2, y2);

ctx.lineTo(x3, y3);

ctx.closePath();

ctx.fill();

// Revert context

ctx.restore();

// Done rendering triangle

}

// Render a checkered background (Colors are set internally)

function RenderBackground()

{

// Shorthand context handle

var ctx = BackContextHandle;

// Draw a checkered light background

var SquareSize = 8;

// Draw an error background

ctx.fillStyle = "rgb(8, 32, 128)";

ctx.fillRect(0, 0, CanvasWidth, CanvasHeight);

// For each screen chunk

for (var y = 0; y < Math.floor((CanvasHeight + SquareSize) / SquareSize); y++)

{

for (var x = 0; x < Math.floor((CanvasWidth + SquareSize) / SquareSize); x++)

{

// Select the color based on positions

var TargetColor = { R: 175, G: 175, B: 175 };

// If we are in a lighter square positions, make color lighter

if (x % 2 != y % 2)

TargetColor.R = TargetColor.G = TargetColor.B = 235;

// Render rectangle

ctx.fillStyle = "rgb(" + TargetColor.R + "," + TargetColor.G + "," + TargetColor.B + ")";

ctx.fillRect(x * SquareSize, y * SquareSize, SquareSize, SquareSize);

}

}

// Done rendering background

}

Note

You are probably wondering why we have two canvas and context handles, and why they are called foreground and background, respectively. This is done to implement a back-buffer / frame-buffer. Back-buffering will be discussed in detail later, but for those eager to understand, it is a way to buffer all of our drawing into a hidden surface, then present that surface as a whole to the user. Since drawing is not instantaneous, this prevents users from seeing parts of the geometry being drawn step-by-step: we batch up our drawing commands and only display the final result once all drawing is complete.

Thus far, you should have three files in your tutorial folder: index.htm, main.js, and source.js. Make sure that you have all of these and that at minimum you see an HTML document with a blank canvas in the middle.

Before moving on, let's give you a taste of what's to come: we're going to implement a very simple line-rotation effect! In your "source.js", replace the "Init()" and "RenderScene()" functions with the following code:

function Init()

{

// Left empty on purpose...

}

function RenderScene()

{

// Render the background

RenderBackground(ContextHandle);

// Find the center of the image

var CenterX = CanvasWidth / 2;

var CenterY = CanvasHeight / 2;

// Render some rotating lines

var TotalLines = 5;

for(var i = -TotalLines; i <= TotalLines; i++)

{

// Find offset corners

var OffsetX = Math.cos(TotalTime * i * (0.25 / TotalLines)) * 100;

var OffsetY = Math.sin(TotalTime * i * (0.25 / TotalLines)) * 100;

// Set a color then draw line

var Color = {R:16, G:128, B:255};

RenderLine(CenterX - OffsetX, CenterY - OffsetY, CenterX + OffsetX, CenterY + OffsetY, i, Color);

}

}

Once pasted, save your changes, and reload the HTML document. You should see the following:

What you should be seeing: a series of animated blue lines of varying thickness rotating about the center.

The code is pretty simple to follow when written in plain English: we first find the center of the screen, then for each of the 11 lines (the loop runs from -5 to 5), we compute a position that is rotating around the center at a fixed distance, and finally we render the line from this position to its reflected position on the opposite side of the center. Don't worry about understanding all of this just yet, since this is a very arbitrary example to get you drawing immediately. The idea here is to make sure your programming environment works!

checkpoint

2D Graphics¶

To create a 3D rasterization renderer, we must understand where we are going to actually draw the 3D! In our case, we will be drawing on a simple and small 2D surface, so let's dive straight into 2D computer graphics:

Pixels¶

So what is a pixel? It is a simple graphical primitive, much like a "dot of color" in an image. When many pixels are placed on a grid, an image can be generated. The word pixel can either mean the conceptual "basic single color square" found in digital images, or the physical color component in a device like a TV, LCD monitor, etc. In our case, we care about the virtual pixel, since we will be writing to a 2D image in a browser.

Pixels, in the digital world, can represent color by a combination of color channels: a combination of red, green, and blue (referred to as the RGB color model, though other color models exist) can be used to produce a unique color. Based on the range of each channel, we can represent thousands, millions, and easily billions of colors! Computers, being digital, represent each channel's value, or intensity, as an integer, commonly from 0 to 255. Thus, each channel is a single "byte" of digital information. Three of these bytes, and a fourth byte representing alpha (transparency), come to a total of 32 bits, which is where the term "32-bit color depth" comes from. There are of course other ways to represent color values, though the most common and simplest to understand is this integer color depth. As an example, black, which is the sum of no intensities, can be represented by (0, 0, 0), or 0 intensity for red, 0 intensity for green, and 0 intensity for blue. White, on the other hand, is the sum of full intensities, and thus can be represented by (255, 255, 255). Note that, as just mentioned, we can represent colors in other ways: another very common way is to represent the range through a floating-point value between 0 and 1, thus black is still (0, 0, 0), but white is now (1, 1, 1). Remember, combinations are what produce all other colors: purple can be represented as (159, 0, 197), using the 32-bit scheme.
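To make the two representations concrete, here is a minimal sketch that converts a channel between the 0 to 255 integer form and the 0 to 1 floating-point form, and packs four channels into one 32-bit value. The helper names (ByteToFloat, FloatToByte, PackRGBA) and the chosen byte order (red in the highest byte) are assumptions made for illustration; they are not part of the tutorial's "main.js".

```javascript
// Convert one channel from the 0..255 integer range to the 0..1 float range
function ByteToFloat(value)
{
    return value / 255;
}

// Convert one channel from 0..1 back to 0..255, clamping out-of-range input
function FloatToByte(value)
{
    var clamped = Math.min(1, Math.max(0, value));
    return Math.round(clamped * 255);
}

// Pack four 8-bit channels into a single unsigned 32-bit integer
// (assumed byte order: R in the highest byte, A in the lowest)
function PackRGBA(r, g, b, a)
{
    // ">>> 0" forces the result to be read as an unsigned value
    return ((r << 24) | (g << 16) | (b << 8) | a) >>> 0;
}

// The purple from the text, fully opaque
var Purple = PackRGBA(159, 0, 197, 255);
console.log(Purple.toString(16)); // prints "9f00c5ff"
```

Each pair of hexadecimal digits in the printed value is one byte: 9f is red (159), 00 is green, c5 is blue (197), and ff is alpha (255).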

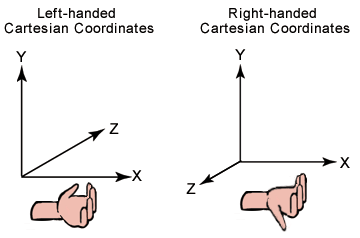

Pixels are not only color values, but also positions. All 2D images can be mapped by coordinates using the Cartesian coordinate system, but the conventions and origin of such a system depend on the framework you are using. OpenGL conventionally uses a right-handed coordinate system: the X and Y origin are in the bottom-left of your screen, with the X position growing towards the right, and the Y position growing upwards. Z, the third dimension since OpenGL is a 3D framework, grows out of the screen towards the viewer, but this is irrelevant to us until later. DirectX conventionally uses a left-handed coordinate system, thus the Z axis grows from the viewer into the screen.

The terms "left handed" and "right handed" define where the Z axis grows towards: take the matching hand (left or right), and curl your fingers from the X-axis towards the Y-axis. Wherever your thumb points is the direction of the Z axis for the respective system. Taken from MSDN DirectX 9 documentation.

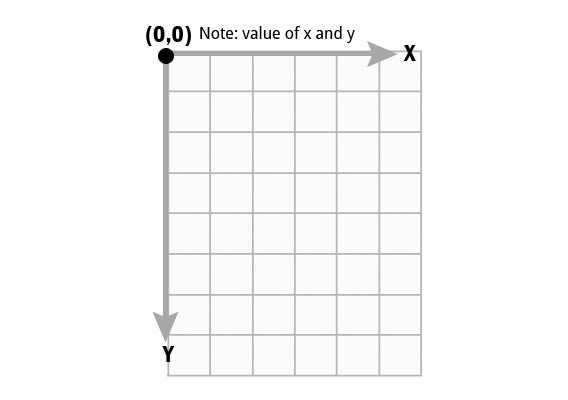

In our case, Canvas is a little different: the origin is in the top-left, with X growing to the right and Y growing to the bottom. Later on, when we define our 3D world, we will use the left-hand notation.

The origin (0,0) is in the top left, with the point (100, 0) on the top-right, (0, 100) on the bottom-left, and (100, 100) in the bottom-right.
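The difference between a bottom-left origin (Y growing upwards) and the Canvas top-left origin (Y growing downwards) comes down to a single subtraction. The sketch below assumes continuous coordinates rather than pixel indices, and ToCanvasY is a hypothetical helper name, not a function from the tutorial's framework.

```javascript
// Convert a Y coordinate measured upwards from the bottom edge (Y-up)
// into a Canvas Y coordinate measured downwards from the top edge (Y-down)
function ToCanvasY(y, canvasHeight)
{
    return canvasHeight - y;
}

// The X coordinate is unchanged: both systems grow to the right.
// On a 100-unit tall surface, the Y-up origin lands on the bottom edge in Canvas terms
var BottomLeft = {x: 0, y: ToCanvasY(0, 100)};

// ... and the Y-up point (0, 100) lands on the Canvas origin at the top-left
var TopLeft = {x: 0, y: ToCanvasY(100, 100)};
```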

Let's do an example: say we want to draw 100 points randomly across the screen every time our RenderScene(...) function is called. Though it'll look like random static (similar to old analog TVs when you set the channel to an empty station), the idea here is to just draw pixels at random locations.

// Custom init function for this demo

function Init()

{

// Nothing to initialize

}

// Main render scene function; called by the RenderLoop() cycle

function RenderScene()

{

// Render the background

RenderBackground(ContextHandle);

// For 100 points

for(var i = 0; i < 100; i++)

{

var x = Math.random() * CanvasWidth;

var y = Math.random() * CanvasHeight;

RenderPoint(x, y, 2);

}

}

checkpoint

Lines¶

Lines are the next step up in the 2D world from pixels: they are a series of pixels that fill the distance between two points. When it comes to computer graphics, drawing lines is a critically important basis for any 3D renderer: it can help with the actual rendering itself, with GUI rendering, debugging, etc. Though conceptually a trivial idea, lines are remarkably complex to implement as efficiently as possible in software. That is to say that lines are easy to draw using the slope-intercept formula, but the easiest (trivial) solutions tend to be slow, and the most efficient approaches are quite complex to understand. Fortunately for us, Canvas abstracts line rendering and provides us a friendly function (which we wrap in our own simplified call in "main.js"). If you are interested in the challenge of implementing a line rendering function, read both this fantastic article on real-world results from line drawing, and the Wikipedia article on line drawing algorithms (the Wikipedia article is great since it also shows good pseudocode).

What you should understand, and hopefully appreciate, is that the basic line-rendering algorithm shouldn't be used, since it is a naive and slow approach, and that our framework code directly calls the Canvas implementation of line-drawing, which should be very fast.
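For the curious, here is a minimal sketch of what a naive line renderer could look like. It is a simple interpolation approach (in the spirit of the slope-based idea described above, sometimes called a DDA), not an optimized algorithm such as Bresenham's, and it is not what Canvas does internally. The function name NaiveLinePixels is hypothetical, and it returns a list of pixel positions rather than drawing anything.

```javascript
// Naive line rasterizer: returns the list of pixels between two points.
// It steps along the longer axis so that no gaps appear on steep lines.
function NaiveLinePixels(x1, y1, x2, y2)
{
    var pixels = [];
    var dx = x2 - x1;
    var dy = y2 - y1;

    // Number of steps: one per pixel along the longer axis
    var steps = Math.ceil(Math.max(Math.abs(dx), Math.abs(dy)));

    // Degenerate case: both end points fall on the same pixel
    if (steps === 0)
    {
        pixels.push({x: Math.round(x1), y: Math.round(y1)});
        return pixels;
    }

    // Walk from the first point to the second in equal increments
    for (var i = 0; i <= steps; i++)
    {
        var t = i / steps;
        pixels.push({x: Math.round(x1 + dx * t), y: Math.round(y1 + dy * t)});
    }

    return pixels;
}
```

Note that every pixel costs a division, two multiplications, and two rounding operations. Faster algorithms avoid most of that per-pixel work by using only integer additions and comparisons.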

Note

Algorithms and computational complexity are important subjects well outside of the scope of this article, but are enormously important for any and all software projects. A simple but clean-cut definition of an algorithm is that it is a series of steps that take some sort of input and give you a desired result. Computational complexity is how easy or hard, relative to known categories, a given algorithm or process is to execute. All of this is relevant to you, because programming is essentially writing an algorithm in a formal computer-programming language. Computational complexity is also critically important to you because computer graphics can be a computationally intensive problem, and thus being aware of fast algorithms and complexity classes can result in better performance. In fact, as you will see later in this tutorial, you can swap some functions' implementations (i.e. change their algorithms) for massive speed increases, significantly lower memory use, or other benefits! We choose not to use the "best" algorithms, since naive approaches may be slow but are very easy to understand. If you are able to master algorithms and computational complexity as a subject matter, that makes you not only a good developer, but a top-notch software engineer!

As an example, let's draw a single line that has one end fixed at the origin, and the other end rotating around the middle of the screen. This will require some basic trigonometry to compute a position on a circle's circumference, which represents rotation around the center of the image, but it's nothing hard:

// Simple growing variable

var Time = 0.0;

// Custom init function for this demo

function Init()

{

// Nothing to initialize

}

// Main render scene function; called by the RenderLoop() cycle

function RenderScene()

{

// Render the background

RenderBackground(ContextHandle);

// Grow time

Time += 0.1;

// Compute screen centers

var CenterX = CanvasWidth / 2;

var CenterY = CanvasHeight / 2;

// Draw a line from the origin (0, 0) to a position on a circle around the center

RenderLine(0, 0, CenterX + Math.cos(Time) * 20.0, CenterY + Math.sin(Time) * 20.0, 2);

}

checkpoint

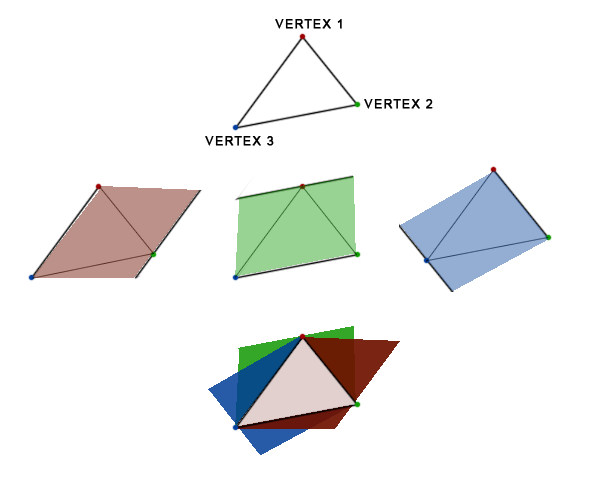

Triangles & Polygons¶

Triangles are the next important drawing primitive in our computer graphics toolkit! Triangles are powerful for two important reasons in the world of computer graphics: they are the simplest space-filling shape that can be drawn with beneficial geometric properties, and they can be filled quickly by a computer! A triangle, being composed of only three points, can never overlap itself, unlike an n-point polygon where n is greater than 3. This means the computer does not have to check for any special cases of whether or not to fill a given pixel, and it can fill one row at a time, being guaranteed that each row of pixels inside a triangle is a single continuous span, and never more. This way, when complex shapes like n-point polygons are needed, you can just render them as a series of triangles, and not worry about developing complex n-point polygon rendering code. Triangle filling also has the advantage of being done very quickly due to hardware acceleration: graphics cards are great at doing many smaller tasks (like filling a row of pixels in a triangle) in parallel!
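As a small illustration of rendering a polygon as a series of triangles, the sketch below splits a polygon into a "fan" of triangles that all share the first point. This is only one of several possible approaches, and it assumes the polygon is convex with its points listed in order around the outline. FanTriangulate is a hypothetical helper name, not part of the tutorial's framework.

```javascript
// Split a convex polygon (an array of {x, y} points, listed in order)
// into triangles that all share the polygon's first point
function FanTriangulate(points)
{
    var triangles = [];

    // Each neighboring pair of points, plus the first point, forms one triangle
    for (var i = 1; i + 1 < points.length; i++)
        triangles.push([points[0], points[i], points[i + 1]]);

    return triangles;
}

// A square becomes two triangles
var Square = [{x: 0, y: 0}, {x: 100, y: 0}, {x: 100, y: 100}, {x: 0, y: 100}];
var SquareTriangles = FanTriangulate(Square);
console.log(SquareTriangles.length); // prints 2
```

An n-point convex polygon always produces n - 2 triangles this way.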

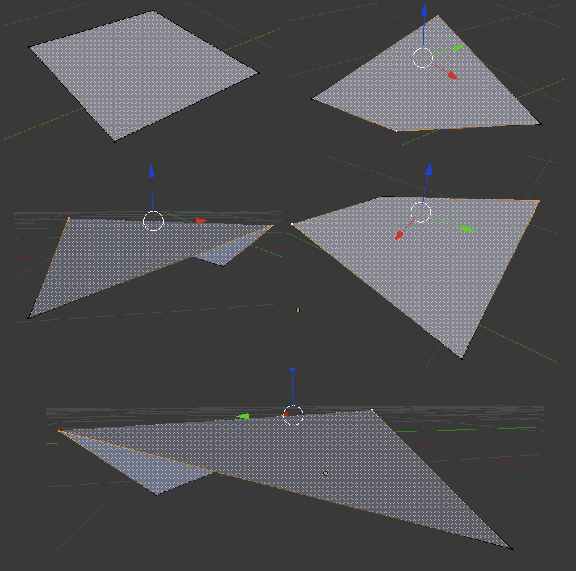

Triangles also have a nice property in the 3D world: you can move the triangle's points in any way you want in 3D-space, in infinite combinations, but triangles will never "fold" on themselves, unlike more complex shapes like rectangles or polygons. This guarantees that an object made of triangles in 3D space will always be "solid" and consistent, which more simply means that the behavior in any case will be clearly defined, and that a triangle always has a correct "facing" direction.

To show why using squares for basic surfaces in 3D space is a problem, consider this thought-experiment: imagine a simple 10 by 10 inch square object, completely flat, floating in space, where the edges are solid but the corners allow edge movement. This square, by definition, has four points. Pick two points, opposite of each other, and leave the two remaining points alone. Try to pinch the two points you've selected, while the remaining two points stay in the same position, and while you are moving the pinched points towards you. It will look like two triangles, like a folded square napkin, where the bases of the two triangles are connected (the two points you left stuck in space), and the two points you took to pinch are pointing towards you. Now ask the critically important question: what is the "surface" of this geometry? Is it one of the two triangle planes facing one another, or is it one of the two planes facing away? If it is either of these two, which of the surfaces is the correct surface, since they point in different directions? The simple fact is, n-point polygons (n being more than 3) lead to too many undefined behaviors when moving around, thus we use triangles because they are always consistent. You'll learn quickly that all polygons, and thus all models, can be represented by a series of triangles. Thus, this thought-experiment could be re-done with two triangles from the start, which is perfectly fine when it comes to the rendering mechanisms, because that square won't be seen as a square, but as two triangles to render, which for the computer makes a world of difference.

See how when we pinch and stretch away points on the square, there are some weird rendering behaviors? Rendered in Blender.

Triangle filling is another critical feature of triangles: as previously mentioned, it can be easy and fast to fill a triangle, and many methods exist. Texturing a triangle, discussed later, is more challenging, but is still computationally fast because triangles are, by definition, convex. Thus, the filling code doesn't have to check at run time if it is ever out of bounds of the geometry; it can start filling from left to right, being guaranteed not to have to do any more work! This small property gives a big run-time boost! There is a great University of Utah article from their Computer Science department on this topic, well worth a read if you are interested in reading through a clean and concise solution.
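To illustrate the "one continuous span per row" property, here is a rough sketch that computes, for every pixel row a triangle touches, the left and right ends of the span to fill. It is a deliberately simple edge-intersection approach, not the method from the article mentioned above and not what Canvas or graphics hardware does. TriangleSpans is a hypothetical name, and the function only returns the spans rather than drawing them.

```javascript
// For each pixel row covered by a triangle, find the single horizontal span
// (left and right x positions) that lies inside it
function TriangleSpans(x1, y1, x2, y2, x3, y3)
{
    var edges = [[x1, y1, x2, y2], [x2, y2, x3, y3], [x3, y3, x1, y1]];
    var top = Math.ceil(Math.min(y1, y2, y3));
    var bottom = Math.floor(Math.max(y1, y2, y3));
    var spans = [];

    for (var y = top; y <= bottom; y++)
    {
        var left = Infinity;
        var right = -Infinity;

        for (var e = 0; e < edges.length; e++)
        {
            var ax = edges[e][0], ay = edges[e][1];
            var bx = edges[e][2], by = edges[e][3];

            // Skip edges that do not reach this row
            if (y < Math.min(ay, by) || y > Math.max(ay, by))
                continue;

            if (ay === by)
            {
                // Horizontal edge lying on this row: both ends bound the span
                left = Math.min(left, ax, bx);
                right = Math.max(right, ax, bx);
            }
            else
            {
                // Find where this edge crosses the row
                var x = ax + (bx - ax) * (y - ay) / (by - ay);
                left = Math.min(left, x);
                right = Math.max(right, x);
            }
        }

        // Because a triangle is convex, one span per row is always enough
        spans.push({y: y, left: left, right: right});
    }

    return spans;
}
```

Filling the triangle is then just a matter of coloring every pixel from "left" to "right" on each row, with no further inside-or-outside checks.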

Convex polygons can have any two points connected without ever intersecting the polygon's edge. This small property is critically important in the hardware speedup for triangle filling algorithms.

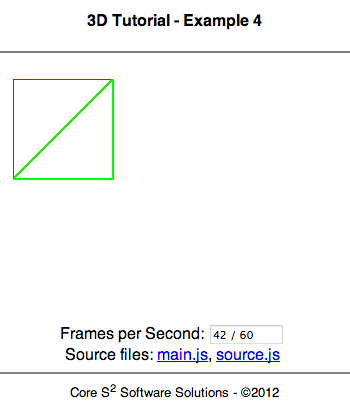

Let's draw a square using two triangles: a trivial proof-of-concept to show that two triangles can form more complex shapes:

// Custom init function for this demo

function Init()

{

// Nothing to initialize

}

// Main render scene function; called by the RenderLoop() cycle

function RenderScene()

{

// Render the background

// Removed to better show the triangle crease / collision

//RenderBackground(ContextHandle);

// Draw triangle one: top-left, to top-right, to bottom-left (red)

RenderTriangle(0, 0, 100, 0, 0, 100, 2, {R:255, G:0, B:0});

// Draw triangle two: top-right, to bottom-right, to bottom-left (green)

RenderTriangle(100, 0, 100, 100, 0, 100, 2, {R:0, G:255, B:0});

}

checkpoint

Images¶

Images are simply a two-dimensional array of colored pixels. This means that an image is a grid, of a given size, that has a data format for each pixel. This format is usually 32 bits per pixel, as mentioned above, with each color channel represented by a byte (8 bits) as an integer from 0 to 255. Images are important to us because we can use them as textures for 3D models and surfaces: rather than drawing a triangle with a single solid color, we may want to have a texture associated with it, giving it more detail, and that can only be done if we have a source image (though you can create images as needed with procedurally generated images or textures).
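In memory, such a grid is commonly stored as one flat array, row after row, with four bytes per pixel. The sketch below shows the index arithmetic under that assumption (it is the same red, green, blue, alpha layout that Canvas image data uses). CreateImage, SetPixel, and GetPixel are hypothetical helper names, not functions from the tutorial's framework.

```javascript
// Create an empty image: 4 bytes (R, G, B, A) per pixel, stored row by row
function CreateImage(width, height)
{
    return {width: width, height: height, data: new Uint8ClampedArray(width * height * 4)};
}

// Write a fully opaque color at the given pixel position
function SetPixel(image, x, y, color)
{
    // Skip "y" full rows, then "x" pixels, at 4 bytes per pixel
    var index = (y * image.width + x) * 4;
    image.data[index + 0] = color.R;
    image.data[index + 1] = color.G;
    image.data[index + 2] = color.B;
    image.data[index + 3] = 255;
}

// Read the color back from the given pixel position
function GetPixel(image, x, y)
{
    var index = (y * image.width + x) * 4;
    return {R: image.data[index + 0], G: image.data[index + 1], B: image.data[index + 2]};
}

// A 4 by 2 image with one purple pixel in the second row
var SampleImage = CreateImage(4, 2);
SetPixel(SampleImage, 3, 1, {R: 159, G: 0, B: 197});
```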

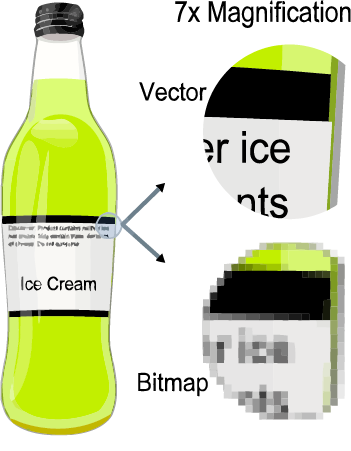

Images exist in many file formats, but the two main categories are raster images / bitmaps, and vector formats. Raster images are just a grid of pixels, which if you zoom into have a finite set of data. Vector images are defined by geometric primitives, and if zoomed into, never lose detail. Though both can be used in computer graphics, 3D rasterization frameworks usually use raster images because it is easier to map image pixel data to screen-space pixel data. Vector images can be used, but have to be "sampled" at run-time (i.e. converted to a bitmap, then used).

Difference between raster / bitmap images and a vector image. Taken from Wikipedia's article on Vector Graphics.

Note

Raster / bitmap image file formats also vary widely, but again fall into two major categories: Lossy compression and Lossless compression. The tradeoff between the two is accuracy and file size, but it's an important difference to know especially if you are working on graphics for an embedded system where memory is smaller or the processor/platform doesn't have good decompression support.

Screen & Double Buffer¶

When drawing directly to a surface, you will be reading and writing data step-by-step. This is to say that if you were to draw three lines, but look at the screen between each drawing event, you will literally see one line drawn at a time. HTML5 Canvas might batch your drawing commands, based on your browser's implementation, but advanced graphics libraries like OpenGL and DirectX certainly don't. This is just the nature of how graphics libraries work: if you give a draw command, unless you are directly using a batching feature, it will be executed immediately. This may seem unimportant, but if you were to draw your scene where you need to do work between drawing different features, the user may see odd rendering behavior: users may actually see objects being drawn one after the other, ruining any illusion of animation! Even if you had a rendering system so fast that users couldn't see the rendering steps, the screen must be cleared for rendering the next frame. Thus, users only see the complete image for a fraction of the time, since as soon as the complete image is presented, your program clears the screen space for the next scene. Some systems, especially with HTML5 Canvas, will not stop showing an image while you are working on it. This means that in our case, more so than others, we must be aware that at any point anything we draw, even if not completed, can be shown to the user. We don't even have a locking mechanism provided by JavaScript, and even if we did we wouldn't want to stall the application just to finish our work (this goes back to an earlier note about playing nice with the host Operating System). Instead, we use "double buffering".

To resolve this, what we do is render your scene into a second screen buffer, invisible to the user. By doing this, we let the user see the previous image for as long as possible, and only when our next image is truly complete, do we swap the screens and show the completed screen. Then your code is free to trash what was previously seen by the user because that screen is no longer used. Simply put: your code should only draw to a surface that isn't visible to the user, because drawing takes time, but when you are done, you swap your screens, since screen-swapping is very quick. This prevents a "stuttering" image, or an image that flickers. You in turn maximize the amount of time the user sees a full image, and minimize the transition time between images. This is all called double buffering.
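The idea can be sketched without any browser features by using two plain pixel arrays. This is only a conceptual illustration: how the tutorial's "main.js" actually presents its hidden canvas is not shown in this section, and the names FrontBuffer, BackBuffer, DrawFrame, and SwapBuffers are hypothetical.

```javascript
// Two same-sized pixel buffers (4 x 4 pixels, 4 bytes each):
// one is shown to the user, the other is the one we draw into
var FrontBuffer = new Uint8ClampedArray(4 * 4 * 4);
var BackBuffer = new Uint8ClampedArray(4 * 4 * 4);

// Draw a full frame, but only ever into the hidden buffer
function DrawFrame(shade)
{
    BackBuffer.fill(shade);
}

// Swap which buffer is visible; no pixel data is copied, so this is very quick
function SwapBuffers()
{
    var temp = FrontBuffer;
    FrontBuffer = BackBuffer;
    BackBuffer = temp;
}

// One frame: draw everything while hidden, then present it all at once
DrawFrame(200);
SwapBuffers();
```

After the swap, the old front buffer becomes the new back buffer, and the next frame is free to overwrite it.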

3D Graphics¶

We are finally at the meat and potatoes of this entire article: prepare for some fun code, lots of exercises, and actually getting some real 3D rendering going on!

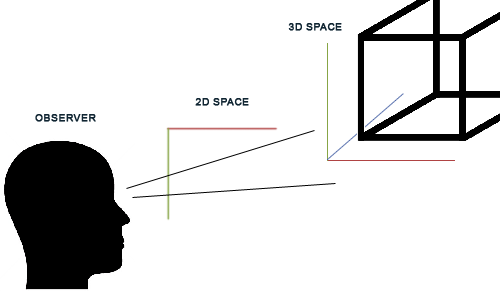

Coordinate System¶

By definition of 3D space (three-dimensional space), we have three axes of freedom to move in: X, Y, and Z. This means that, relative to the observer, a point can move left-right, up-down, and towards-or-away from your view. In our program, our goal will be to take 3D geometry (points, lines, triangles, and eventually meshes), and convert it (formally called "mapping" or "projection") into 2D space. For this to be done, we need to know where the given geometry is, and where we want to view it from. That's all we need to know to get working! To keep our systems clean and consistent, we will use a left-handed 3D coordinate system, where positive-X grows to the right, positive-Y grows up, and positive-Z grows from the observer into the screen. We will also use the forced Canvas convention for the 2D coordinate space system: the origin is in the top-left, with positive-X growing to the right, and positive-Y growing to the bottom.

The global coordinate system: 3D space, as seen by the observer, through a 2D space.

View Projection¶

So how exactly do we "map", or "project", from 3D space into a 2D coordinate system? There are a variety of methods to do 3D projection, each used for different purposes. Orthographic projection simply removes the Z component of all geometry, and draws just the X and Y components onto the 2D surface. Though simple and easy, this creates a very odd result: no matter how far or how close the object is, it appears the same size! This looks odd because in the real world, we have a visual perception of distance based on objects being bigger when they are close, and smaller when they are far. This is formally called visual perspective, and is exactly what we want to implement.

Note

Different projection methods really are important for a variety of applications. Orthographic projection, where you see objects "head on" without any scaling changes based on distance, is critically useful when designing mechanical components in CAD (computer aided design / drafting) software. This is because you can precisely manipulate how parts are to be manufactured as though you are working on a 2D piece of drafting paper. Other projection methods, like isometric projection, are useful in video games where you may need depth as an in-game element, but do not want to present it as a visual element: this is a style common in the SNES-generation of games. Perspective projection is the standard 3D rendering mechanism.

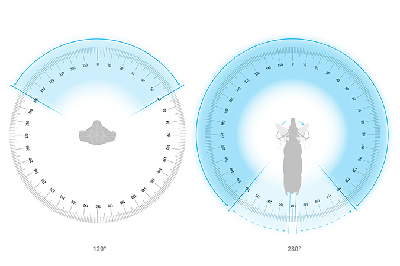

Fortunately for us, perspective projection only requires a trivial amount of extra work: the size of geometry is multiplied by the inverse of distance, multiplied by your field-of-view. Field-of-view is your visual range: how wide you can see. Humans typically have about a 100 to 180-degree view in front of us, while some animals (birds) have nearly a 360-degree view. This, by definition, affects how much we see around us without moving our heads, and thus if your field of view is big, you see more, and have to put more on-screen, so we multiply the inverse distance by this factor so that we can "crunch" more of our 3D visible space into the 2D space.

The average human's field of view compared to that of a horse.
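One common way to turn an angular field of view into a projection scale factor is sketched below. This is an assumption about how such a factor could be derived, not a description of how the tutorial picks its own constant: the idea is that a point sitting exactly on the edge of the view should land exactly on the edge of the screen. FovToScale is a hypothetical helper name.

```javascript
// Convert a horizontal field of view (in degrees) into a projection scale
// factor for a screen of the given width (in pixels)
function FovToScale(fovDegrees, screenWidth)
{
    var fovRadians = fovDegrees * Math.PI / 180;

    // A point on the edge of the view has x / z = tan(fov / 2),
    // and must be mapped to half the screen width away from the center
    return (screenWidth / 2) / Math.tan(fovRadians / 2);
}

// A wider field of view gives a smaller scale factor:
// more of the 3D world is "crunched" into the same number of pixels
var NarrowScale = FovToScale(60, 200);
var WideScale = FovToScale(120, 200);
```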

Now, we can directly apply the perspective projection to convert the 3D points to 2D points, but there is still an important open-ended question: how do we deal with camera movement, for both rotation and translation? This part does take some substantial knowledge of linear algebra, but we can at minimum describe it in simplified English: we take the camera movements and apply the inverse behavior to all 3D models, then apply the 3D to 2D projection. Think about this as an example of why this works: if you look at a TV screen, and move your head to your right, relative to your perception, the TV is moving to the left. If you rotate your head clockwise while looking at a TV screen, relative to your perception, the TV is rotating counter-clockwise. So all we have to do in code to deal with these movements is to apply the opposite effect. Yet, how do we implement this in math and eventually in code? Well, as just mentioned, we will have to use linear algebra (or at minimum the simplified algebraic expressions).

$$a_{2D} = \frac{a_{3D}}{\text{distance}} \times (\text{field of view})$$

Simplified perspective projection, to get us started.
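The difference between the two projection styles is easiest to see side by side. The sketch below applies the simplified equation above to a single point; it assumes the point is in front of the viewer (z greater than zero) and that "fieldOfView" is just a plain scale factor. ProjectOrthographic and ProjectPerspective are hypothetical helper names.

```javascript
// Orthographic projection: simply drop the Z component
function ProjectOrthographic(point)
{
    return {x: point.x, y: point.y};
}

// Simplified perspective projection: divide by distance, scale by field of view
// (assumes point.z > 0, i.e. the point is in front of the viewer)
function ProjectPerspective(point, fieldOfView)
{
    return {
        x: (point.x / point.z) * fieldOfView,
        y: (point.y / point.z) * fieldOfView
    };
}

// The same point, once close to the viewer and once twice as far away
var Near = {x: 1, y: 1, z: 2};
var Far = {x: 1, y: 1, z: 4};

console.log(ProjectOrthographic(Near).x, ProjectOrthographic(Far).x);       // prints 1 1
console.log(ProjectPerspective(Near, 32).x, ProjectPerspective(Far, 32).x); // prints 16 8
```

With orthographic projection both points land in the same place, while with perspective projection the point that is twice as far away ends up half as far from the center.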

Point¶

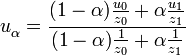

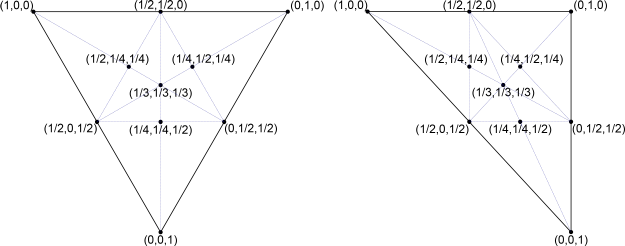

First things first: we are going to take a 3D point, before any sort of application of projection or movement, and define it as the variable named "d", with the three-component subscripts of "dx, dy, dz". We first take this point, then rotate it about each of the axes individually. You'll notice shortly that there is an important order to this process, and it is due to the nature of multiplication / order-of-application associated with linear algebra. After this rotation, we can then apply translation: i.e. movement. Finally, at the very end, we apply the projection factor, which is the equation marked above. Wikipedia has a great graphic that shows this math in a formalized form:

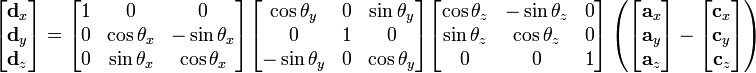

The formal camera-transformation function, in linear algebraic form, for all geometry. Link to original article from Wikipedia.

Now this equation, since it is in linear algebraic form, might seem immensely intimidating, but don't let that bother you! The following is the exact same maths, but in a simplified, less formal but more reader-friendly, form:

The formal camera-transformation function, in simplified algebraic form, for all geometry. Link to original article from Wikipedia. Note: in our code, we will simplify these terms into more readable forms!

To help you, before coding this up, let's clarify the variable names and what they mean for a scene: as previously mentioned, "dx, dy, dz" is the point in question we are trying to convert from 3D-space to a camera-corrected position. The subscripts represent the position-components of the 3D position that the point has. The variable a, with the form "ax, ay, az", is the original position of the point. Essentially d is the result, and a is the original position. The variable c, with the form "cx, cy, cz", is the camera's position. Look at the right of the linear-algebraic form: you will notice that we multiply our rotations by the difference between our 3D point and our 3D camera positions: this is all about moving the point in the opposite direction to the camera's movement. The variable theta (using Unicode: "Θ") represents the rotation, about the respective axes, of the camera. This means that, based on our 3D coordinate system, if thetax (again, using Unicode: "Θx") is set to 45 degrees, we are rotating the camera around the x axis, pitching down (looking down) by 45 degrees.
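Using these variable names, the camera transformation can be sketched as a single function. This assumes the convention used in Wikipedia's 3D projection article, where the camera position is subtracted first and the result is then rotated about the z, y, and x axes in that order; it is not necessarily the exact form or order the tutorial's own code uses later. CameraTransform is a hypothetical helper name, and all angles are in radians.

```javascript
// Transform a point "a" into a camera-corrected point "d", given the
// camera position "c" and the camera rotation "theta" (in radians)
function CameraTransform(a, c, theta)
{
    // Position of the point relative to the camera
    var x = a.x - c.x;
    var y = a.y - c.y;
    var z = a.z - c.z;

    // Pre-compute the sine and cosine of each rotation angle
    var sinX = Math.sin(theta.x), cosX = Math.cos(theta.x);
    var sinY = Math.sin(theta.y), cosY = Math.cos(theta.y);
    var sinZ = Math.sin(theta.z), cosZ = Math.cos(theta.z);

    // The three rotation matrices multiplied out into plain algebra
    return {
        x: cosY * (sinZ * y + cosZ * x) - sinY * z,
        y: sinX * (cosY * z + sinY * (sinZ * y + cosZ * x)) + cosX * (cosZ * y - sinZ * x),
        z: cosX * (cosY * z + sinY * (sinZ * y + cosZ * x)) - sinX * (cosZ * y - sinZ * x)
    };
}

// With no rotation, the result is simply the point relative to the camera
var Unrotated = CameraTransform({x: 1, y: 2, z: 3}, {x: 0, y: 0, z: -10}, {x: 0, y: 0, z: 0});
console.log(Unrotated.x, Unrotated.y, Unrotated.z); // prints 1 2 13
```

A useful sanity check for any rotation code is that it never changes a point's distance from the camera; only the direction changes.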

Finally, once we have dealt with position rotation and translation, we need to apply our projection to convert from this corrected 3D point to a 2D screen point:

$$\text{Screen}_x = \frac{d_x}{d_z} \times (\text{field of view})$$

$$\text{Screen}_y = \frac{d_y}{d_z} \times (\text{field of view})$$

Applying the final step in 3D to 2D projection: note how we divide not by distance, but by z? It is the same thing! We moved the 3D geometry into the correct position, thus the z-value of every position is truly its distance from the camera.

Hopefully you haven't been lost by the math thus far; if you have, stick with it! I freely admit that when I started doing all of this 3D programming myself, I struggled over and over again until I got further into implementing it all in code, which is what we will now tackle.

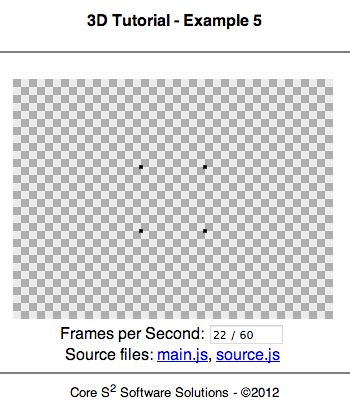

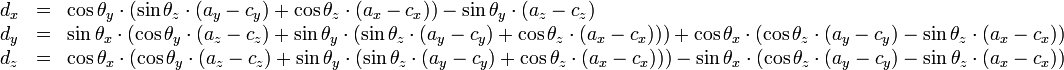

Load your text editor, and open the "source.js" source file. We are going to define the cube geometry, which consists of 8 points, with each point having three components (x, y, and z). JavaScript is great because it allows us to define both the data, and the data's structure, all at once! Create a new variable named "CubeVertex" in which we define an array of triple-floats (in global scope, so define it outside of a function). It should look like the following:

A sample cube with each vertex labeled. The grey surface is the "front" of the cube.

// Cube vertex data

var CubeVertex =

[

{x:-1, y:-1, z:1},

{x:-1, y:1, z:1},

{x:1, y:1, z:1},

{x:1, y:-1, z:1},

{x:-1, y:-1, z:-1},

{x:-1, y:1, z:-1},

{x:1, y:1, z:-1},

{x:1, y:-1, z:-1},

];

From here, we also need to define the camera's properties, which includes the position, field-of-view, and later on in this text the rotation.

// Camera position

var CameraPos = {x: 0, y: 0, z: -10};

// Camera distortion

var RatioConst = 32;

For now, let's implement the renderer without any rotation (as just mentioned), and have the cube be centered on-screen, as seen head-on:

function Init()

{

// Nothing to initialize

}

function RenderScene()

{

// Render the background

RenderBackground(ContextHandle);

// Find the center of the image

var CenterX = CanvasWidth / 2;

var CenterY = CanvasHeight / 2;

// For each vertex point

for(var i = 0; i < CubeVertex.length; i++)

{

// Convert from x,y,z to x,y

// This is called a projection transform

// We are projecting from 3D back to 2D

// Divide by the depth relative to the camera, not by the raw z value

var Depth = CubeVertex[i].z - CameraPos.z;

var ScreenX = (RatioConst * (CubeVertex[i].x - CameraPos.x)) / Depth;

var ScreenY = (RatioConst * (CubeVertex[i].y - CameraPos.y)) / Depth;

// Draw this point on-screen

RenderPoint(ScreenX + CenterX, ScreenY + CenterY, 3);

}

}

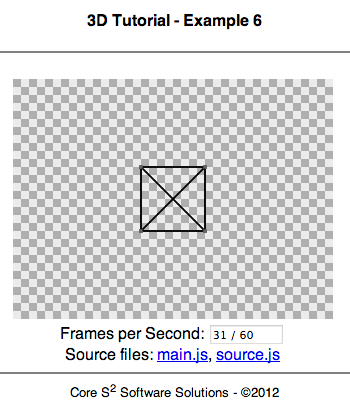

Notice how we have to add "CenterX" and "CenterY" to each position? This is again done so that we move what the camera sees onto the center of the screen. As an experiment, leave them out and see the results: the cube will now be centered on the top-left of the screen, which is the true origin of our screen. Save your changes, and open your "index.htm" page or reload it. You will see 4 distinct points, which are the 8 points of the cube seen head-on (note how there are truly 8 points on-screen: each pair of front and back points is drawn on top of each other because there has been no rotation, and the object isn't deep enough to distinguish between the front and back points through projection distortion). If you are unable to see anything, make sure that the "index.htm" source is correctly loading your JavaScript source file.

checkpoint

Geometry¶

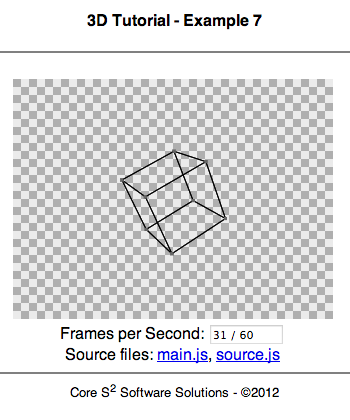

Before tackling rotation, which will come shortly, let's render a wireframe version of our cube: we must define a new array, named "CubeEdges", that tells the renderer which points of the cube should be connected with other points, forming the cube "edges".

// Cube edge data

var CubeEdges =

[

{i:0, j:1},

{i:1, j:2},

{i:2, j:3},

{i:3, j:0},

{i:4, j:5},

{i:5, j:6},

{i:6, j:7},

{i:7, j:4},

{i:0, j:4},

{i:1, j:5},

{i:2, j:6},

{i:3, j:7},

];

Instead of what we did in the previous code, where we directly rendered the point on-screen after converting it from 3D to 2D, we will instead save these points and then use their locations when rendering lines from the "CubeEdges" list.

// Create an on-screen point list we will be working with

var PointList = new Array();

// ... Previous code here ...

// In the "For each vertex point" for-loop, replace the last line that rendered a point to:

PointList[i] = {x:CenterX + ScreenX, y:CenterY + ScreenY};

// ... After the above-mentioned loop, add our line iteration loop: ...

// For each edge

for(var i = 0; i < CubeEdges.length; i++)

{

// Find the two points we are working on

var Point1 = PointList[CubeEdges[i].i];

var Point2 = PointList[CubeEdges[i].j];

// Render the edge by looking up our vertex list

RenderLine(Point1.x, Point1.y, Point2.x, Point2.y, 1);

RenderPoint(Point1.x, Point1.y, 3, {R:100, G:100, B:100});

RenderPoint(Point2.x, Point2.y, 3, {R:100, G:100, B:100});

}

checkpoint

Finally, let's tackle cube-rotation animation! We will be using the exact same code as lesson 2, but instead of doing our simplified projection function, we will implement the full rotation equation, which is a bit more complex, but again do your best to read and understand each new block of code line-by-line. The two big changes we will introduce are 1. the camera rotation variable, and how that grows over time, and 2. the application of the rotation algebra. Note that for the sake of our demo, we are rotating the cube's point positions, and not the actual camera. There is no mathematical difference, but it does keep our code simpler and more readable.

// 1.1: Add the new global camera rotation variable:

// This should be in global scope

// Camera rotation (Pitch, yaw, roll)

var CameraRot = {x: 0, y: 0, z: 0};

// 1.2: Add the growth of the camera rotation (so we keep rotating every time we render our scene)

// This should be in the beginning of our "RenderScene()" function

// Slightly grow the rotations

CameraRot.x += 0.02;

CameraRot.y += 0.02;

CameraRot.z += 0.02;

// 2.1: Apply the rotation, for each axis

// This should be right above where we applied the projection transformation

// Apply rotation onto the vertex

var Temp = WorkingVertex.z;

WorkingVertex.z = -WorkingVertex.x * Math.sin(CameraRot.y) - WorkingVertex.z * Math.cos(CameraRot.y);

WorkingVertex.x = -WorkingVertex.x * Math.cos(CameraRot.y) + Temp * Math.sin(CameraRot.y);

Temp = WorkingVertex.z;

WorkingVertex.z = -WorkingVertex.y * Math.sin(CameraRot.x) + WorkingVertex.z * Math.cos(CameraRot.x);

WorkingVertex.y = WorkingVertex.y * Math.cos(CameraRot.x) + Temp * Math.sin(CameraRot.x);

Temp = WorkingVertex.x;

WorkingVertex.x = WorkingVertex.x * Math.cos(CameraRot.z) - WorkingVertex.y * Math.sin(CameraRot.z);

WorkingVertex.y = WorkingVertex.y * Math.cos(CameraRot.z) + Temp * Math.sin(CameraRot.z);

// Apply camera translation after the rotation, so we are actually just rotating the object

WorkingVertex.x -= CameraPos.x;

WorkingVertex.y -= CameraPos.y;

WorkingVertex.z -= CameraPos.z;

// 2.2: Apply the projection transformation

// This is where the original 3D to 2D two lines of code are in the vertex iteration for-loop

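// Note: project the rotated and translated "WorkingVertex", not the original "CubeVertex[i]",

// otherwise the rotation has no visible effect (the camera translation was already applied above)

var ScreenX = (RatioConst * WorkingVertex.x) / WorkingVertex.z;

var ScreenY = (RatioConst * WorkingVertex.y) / WorkingVertex.z;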
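
If the role of the "Temp" variable is unclear, it may help to look at the three rotation steps as one small function. The sketch below wraps the same formulas in a hypothetical helper named "RotateVertex" (the helper, "VertexLength", and the sample values are assumptions for illustration). Each step mixes two coordinates, and both new values must be computed from the old ones, which is why the coordinate that gets overwritten first is saved in "Temp".

```javascript
// Hypothetical helper: applies the three rotation steps to a copy of the vertex
function RotateVertex(vertex, rot)
{
    var v = {x: vertex.x, y: vertex.y, z: vertex.z};

    // Around the Y axis (yaw): mixes x and z
    var Temp = v.z;
    v.z = -v.x * Math.sin(rot.y) - v.z * Math.cos(rot.y);
    v.x = -v.x * Math.cos(rot.y) + Temp * Math.sin(rot.y);

    // Around the X axis (pitch): mixes y and z
    Temp = v.z;
    v.z = -v.y * Math.sin(rot.x) + v.z * Math.cos(rot.x);
    v.y = v.y * Math.cos(rot.x) + Temp * Math.sin(rot.x);

    // Around the Z axis (roll): mixes x and y
    Temp = v.x;
    v.x = v.x * Math.cos(rot.z) - v.y * Math.sin(rot.z);
    v.y = v.y * Math.cos(rot.z) + Temp * Math.sin(rot.z);

    return v;
}

// Distance of a vertex from the origin
function VertexLength(v)
{
    return Math.sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
}

var Before = {x: 1, y: 2, z: 3};
var After = RotateVertex(Before, {x: 0.3, y: 1.1, z: -0.7});

// A rotation never stretches anything, so both lengths should match
console.log(VertexLength(Before), VertexLength(After));
```

Comparing lengths before and after is a handy sanity check: if the two numbers differ by more than a tiny rounding error, one of the formulas was probably mistyped. One detail worth knowing is that, with these particular sign choices, a rotation of zero still maps (x, y, z) to (-x, y, -z), which is a half-turn around the Y axis. That is harmless for a symmetric cube.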

checkpoint

Note

Trigonometric functions are computationally intensive: they are non-trivial functions that require a bit of work for the computer to do. Classically, computers only take a "step" or two to execute simple math operations, like addition or multiplication, yet functions like sine and cosine take much more time because they have to execute complex estimation algorithms. Nowadays, these functions are relatively fast, either because of software optimizations, such as table-based estimation (i.e. the function just looks up pre-computed values), or because the functions use specialized hardware features within the processor. If you ever need to implement your own trigonometric functions because you believe there are speed issues, be aware that you will have to find a balance between slow-but-accurate and fast-but-inaccurate! On a somewhat related note, the square-root function is commonly used in graphics programming, and there are methods to estimate its result much more quickly than traditional implementations provide. Square roots are, much like other math functions, generally hardware-accelerated.
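
To make the accuracy trade-off concrete, here is a minimal sketch of a table-based sine. The names "SineTable" and "FastSin" and the table size of 1024 are assumptions chosen for illustration. Whether a table actually beats the built-in "Math.sin" depends on the JavaScript engine, so measure before relying on it.

```javascript
// Hypothetical table size: more entries means more memory but a smaller error
var SineTableSize = 1024;
var SineTableStep = (2 * Math.PI) / SineTableSize;

// Pre-compute one full turn of sine values
var SineTable = [];
for(var i = 0; i < SineTableSize; i++)
{
    SineTable[i] = Math.sin(i * SineTableStep);
}

// Approximate sine: round the angle to the nearest table entry
function FastSin(angle)
{
    var index = Math.round(angle / SineTableStep) % SineTableSize;
    if(index < 0)
        index += SineTableSize;
    return SineTable[index];
}

// Measure the worst error over a sweep of angles
var WorstError = 0;
for(var a = -10; a <= 10; a += 0.001)
{
    WorstError = Math.max(WorstError, Math.abs(FastSin(a) - Math.sin(a)));
}
console.log("Worst error: " + WorstError);
```

Because the angle is rounded to the nearest entry, the error can be at most half a table step, which is roughly 0.003 for 1024 entries. Doubling the table size halves that error, which is exactly the slow-but-accurate versus fast-but-inaccurate balance described above.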

Mesh¶

I've mentioned in this article several times how important triangles are because of their special properties as mesh primitives. Let's go ahead and convert our edge-based "wireframe" system into a more correct triangle system, so that we can eventually tackle the problem of rendering a surface! Similar to the edge definition system, where we refer to vertices through their vertex index, we will now define a triangle as three indices. Note that such a 3D model is sometimes referred to as a "mesh", which defines both the model's vertices, and the surface (generally through triangles).

// 1. We replace our "CubeEdges" definition with "CubeFaces"

// This should be in global scope; in the same scope as "CubeEdges" once was

// Cube face data

var CubeFaces =

[

// Front

{ a:0, b:1, c:2, i:1 },

{ a:2, b:3, c:0, i:1 },

// Top

{ a:1, b:5, c:6, i:2 },

{ a:6, b:2, c:1, i:2 },

// Back

{ a:5, b:4, c:7, i:3 },

{ a:7, b:6, c:5, i:3 },

// Bottom

{ a:4, b:0, c:3, i:4 },

{ a:3, b:7, c:4, i:4 },

// Right

{ a:3, b:2, c:6, i:5 },

{ a:6, b:7, c:3, i:5 },

// Left

{ a:0, b:5, c:1, i:6 },

{ a:0, b:4, c:5, i:6 },

];

// 2. Replace the entire edge iteration for-loop with the following:

// This should be in your "RenderScene()" function

// For each face

for(var i = 0; i < CubeFaces.length; i++)

{

// Find the three points we are working on

var PointA = PointList[CubeFaces[i].a];

var PointB = PointList[CubeFaces[i].b];

var PointC = PointList[CubeFaces[i].c];

// Render the face by looking up our vertex list

RenderTriangle(PointA.x, PointA.y, PointB.x, PointB.y, PointC.x, PointC.y, 2);

}
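
One way to convince yourself that the triangle list really describes the same cube is to expand each triangle back into its three edges. The sketch below does that with a hypothetical helper, "CollectUniqueEdges", which is an assumption for illustration and not part of the article's code. It copies the index triples from "CubeFaces" and counts how many distinct edges they produce.

```javascript
// Same index triples as "CubeFaces", without the per-side "i" field
var SketchFaces = [
    {a:0, b:1, c:2}, {a:2, b:3, c:0},   // Front
    {a:1, b:5, c:6}, {a:6, b:2, c:1},   // Top
    {a:5, b:4, c:7}, {a:7, b:6, c:5},   // Back
    {a:4, b:0, c:3}, {a:3, b:7, c:4},   // Bottom
    {a:3, b:2, c:6}, {a:6, b:7, c:3},   // Right
    {a:0, b:5, c:1}, {a:0, b:4, c:5}    // Left
];

// Hypothetical helper: list every distinct edge used by the triangles
function CollectUniqueEdges(faces)
{
    var seen = {};
    var edges = [];
    for(var f = 0; f < faces.length; f++)
    {
        var corners = [faces[f].a, faces[f].b, faces[f].c];
        for(var k = 0; k < 3; k++)
        {
            // Edge from this corner to the next one, wrapping c back to a
            var i = corners[k];
            var j = corners[(k + 1) % 3];

            // Order the pair so that 1-2 and 2-1 count as the same edge
            var key = Math.min(i, j) + "-" + Math.max(i, j);
            if(!seen[key])
            {
                seen[key] = true;
                edges.push({i: Math.min(i, j), j: Math.max(i, j)});
            }
        }
    }
    return edges;
}

var UniqueEdges = CollectUniqueEdges(SketchFaces);
console.log(UniqueEdges.length); // 18: the 12 cube edges plus one diagonal per side
```

The six extra edges are the diagonals that split each square side into two triangles, which is why the triangle wireframe shows a line across every side of the cube.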

checkpoint

Complex Geometry¶

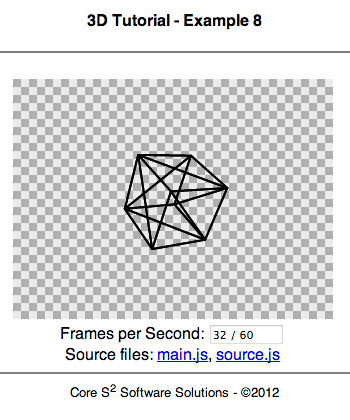

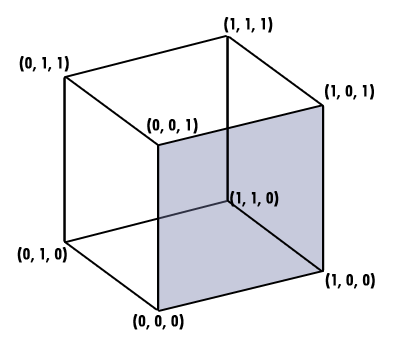

How do we deal with more complex geometry? Turns out you already have everything you need! The existing code-base is ready to parse any number of vertices and surfaces (as long as those surfaces are triangles). As a fun proof-of-concept exercise, let's render the Blender Monkey. Our code only has to change slightly: we first remove all of the cube model information, and instead read the large monkey model data.

// 1. Change the camera position to better see the output model:

// Camera position

var CameraPos = {x: 0, y: -1, z: -5};

// 2. Change the actual model data

// ... Please load the text from the checkpoint source code (too big to include here) ...

// 3. When rendering the model's faces, note that we have to subtract 1 from the indices:

// Note: Source data starts at index 1 ... n, not 0 to n - 1, so we -1

var PointA = PointList[CubeFaces[i].a - 1];

var PointB = PointList[CubeFaces[i].b - 1];

var PointC = PointList[CubeFaces[i].c - 1];

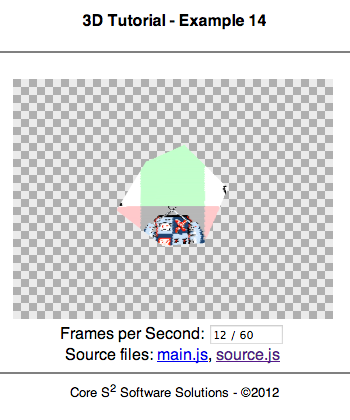

Blender's monkey-face (Suzanne)! If you want, you may choose to continue using this model in the article's code, but be warned: there are 968 triangles in this model, and performance will be terrible!

As an exercise, I leave you with the challenge of writing an object-file parser. Object files, ending in *.obj, are a very simple plain-text mesh encoding, where mesh data is saved as a series of vertices and indices. Be warned that object files sometimes mix 3-point and 4-point surface primitives, yet in our case we only support 3-point primitives: either change the file by hand, or write code to handle this. Also be warned that since this article is based entirely on a software renderer, any model with a large number of triangles will be very slow to render.
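
If you get stuck on the exercise, here is one possible starting point. It is a minimal sketch under several assumptions: the helper name "ParseObj" and its return shape are made up for illustration, only "v" (vertex) and "f" (face) lines are read, and negative (relative) indices are not supported. Faces with more than three corners are split with a simple fan, which is only correct for convex polygons.

```javascript
// Hypothetical helper: parses only "v" and "f" lines of object-file text
function ParseObj(text)
{
    var vertices = [];
    var faces = [];
    var lines = text.split("\n");

    for(var n = 0; n < lines.length; n++)
    {
        var parts = lines[n].trim().split(/\s+/);

        if(parts[0] == "v")
        {
            vertices.push({x: parseFloat(parts[1]), y: parseFloat(parts[2]), z: parseFloat(parts[3])});
        }
        else if(parts[0] == "f")
        {
            // Each corner looks like "7", "7/2" or "7/2/5"; keep only the vertex index
            var indices = [];
            for(var k = 1; k < parts.length; k++)
            {
                indices.push(parseInt(parts[k].split("/")[0], 10));
            }

            // Fan triangulation: a quad (1 2 3 4) becomes (1 2 3) and (1 3 4)
            for(var t = 1; t + 1 < indices.length; t++)
            {
                faces.push({a: indices[0], b: indices[t], c: indices[t + 1]});
            }
        }
        // Everything else (comments, normals, texture coordinates, ...) is skipped
    }

    return {vertices: vertices, faces: faces};
}

var SampleObj = [
    "# a single square made of one quad",
    "v 0 0 0",
    "v 1 0 0",
    "v 1 1 0",
    "v 0 1 0",
    "f 1 2 3 4"
].join("\n");

var Model = ParseObj(SampleObj);
console.log(Model.vertices.length + " vertices, " + Model.faces.length + " triangles");
```

The face indices are kept exactly as they appear in the file, so they start at 1. That matches the monkey model data above, where we subtract 1 when looking up "PointList".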

Note

There are a couple of "famous" 3D models you will find commonly used across platforms and frameworks: these include the Utah Teapot, the Stanford Bunny, and the Cornell Box. Each is famous for being used to introduce or demo important 3D computer graphics technologies in academic publications.

checkpoint

Solid Mesh¶

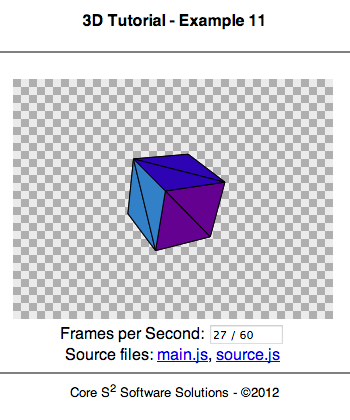

Tired of wireframes? Replace all of the "RenderTriangle(...)" function calls with "RenderFillTriangle(...)"! Since we want to differentiate between each surface, instead of rendering each triangle as black (so that the entire cube looks the same), we will vary the color using a simple modulo operation applied to each face's side index (the "i" property in "CubeFaces") multiplied by a factor. This gives each of the six cube sides its own color in this demo.

// ... This code should replace the pair of "RenderTriangle(...)" function calls ...

// Generate a unique face color

var Color = {R:(CubeFaces[i].i * 50) % 255, G:(CubeFaces[i].i * 128) % 255, B:(CubeFaces[i].i * 200) % 255};

// Render the face by looking up our vertex list

RenderFillTriangle(PointA.x, PointA.y, PointB.x, PointB.y, PointC.x, PointC.y, 2, Color);

RenderTriangle(PointA.x, PointA.y, PointB.x, PointB.y, PointC.x, PointC.y, 1);
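
To see which colors the formula actually produces, the sketch below wraps it in a hypothetical helper named "FaceColor" (an assumption for illustration) and prints the result for each of the six side indices.

```javascript
// Hypothetical helper wrapping the color formula from the snippet above
function FaceColor(sideIndex)
{
    return {R:(sideIndex * 50) % 255, G:(sideIndex * 128) % 255, B:(sideIndex * 200) % 255};
}

// Print the color of each of the six cube sides (the "i" values 1 to 6)
for(var side = 1; side <= 6; side++)
{
    var c = FaceColor(side);
    console.log(side + ": " + c.R + ", " + c.G + ", " + c.B);
}
```

Side 1 comes out as 50, 128, 200 and side 2 as 100, 1, 145, since 256 and 400 wrap around at 255. Both triangles of a side share the same "i" value, so they are filled with the same color.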

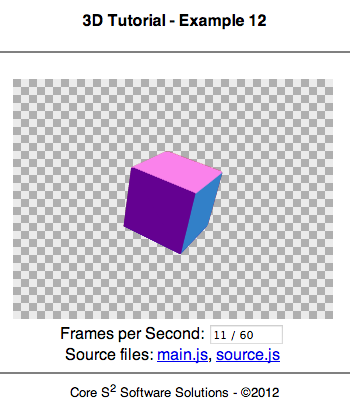

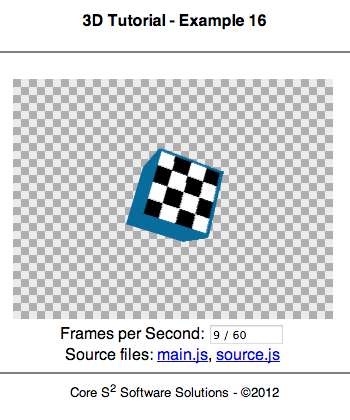

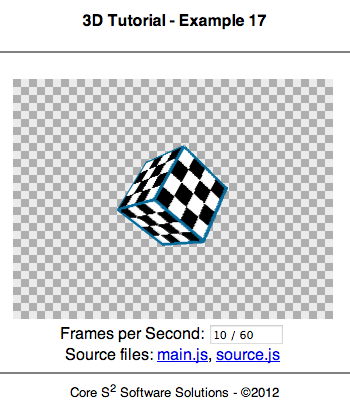

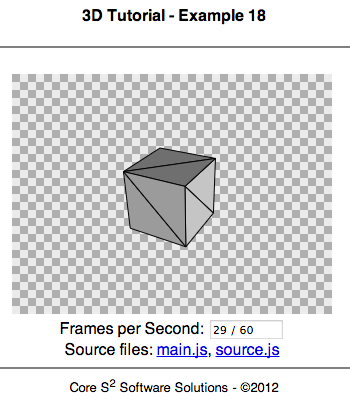

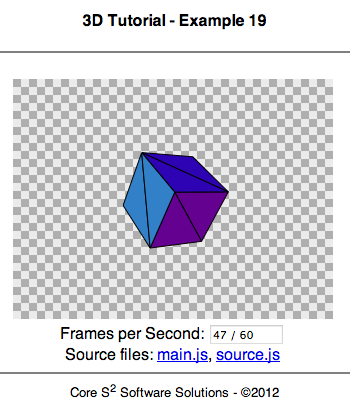

What you should see on your screen! Note that the cube should be rotating. Also note that the sorting error is normal, and to be discussed next.

Whoa! What in the world!? Don't panic! The error you are seeing, where faces are incorrectly placed behind each other, is 100% normal and a great segue into the next section: the depth problem. We are rendering our triangles in the order defined by our original triangle geometry list, but this order is not correct, since we may view the object from behind or at different angles. Why should it matter where we view the object from? Well, since Canvas is a 2D surface, it maintains no depth, and thus whatever is drawn first gets covered by anything drawn next. This is called the "painter's problem", and we will discuss how to better choose which triangles to render first!

checkpoint

Surfaces¶

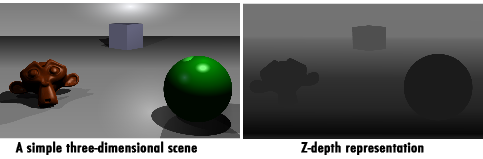

To create the illusion that our objects are solid, we must render the model's surfaces. We can do this by defining a series of triangles (or polygons) representing the surfaces of an object, just as before. This is called a mesh, and with rasterization we might render triangle surfaces out of order, leading to that bizarre bug seen just before. To solve this problem, we have to look into depth-based sorting algorithms, so that only the triangles visible to the viewer end up on screen, instead of relying on the ordering provided in the mesh definition.

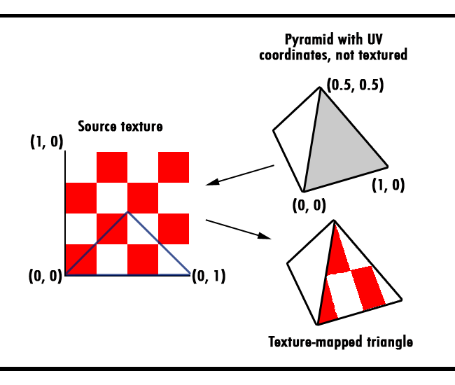

Terms are easily confused in 3D computer graphics, since words like "texture", "material", and "surface" may appear to be interchangeable, but are not, and their differences have important implications. A texture, or image, is simply a flat 2D grid made of pixels. A material is the combination of a texture and several properties: reflectivity, transparency, ambient color, etc. The majority of professional 3D applications use materials, since realistic models need to react differently to lights, based on what the object is made out of. Note that a material may sometimes be a combination of several textures and properties, such as the regular texture and a "bump-map texture", which shows surface relief based on the angles of light. A surface is strictly the geometry primitive that defines opaque elements of a model in 3D space, which are generally triangles. Generally a single model, made up of many surfaces, has a single material applied to it, though complex models may require different materials on different surfaces. Finally, "mesh", "model", and "geometry" all refer to the same thing: the combination of points and triangles.

Painter's Algorithm¶

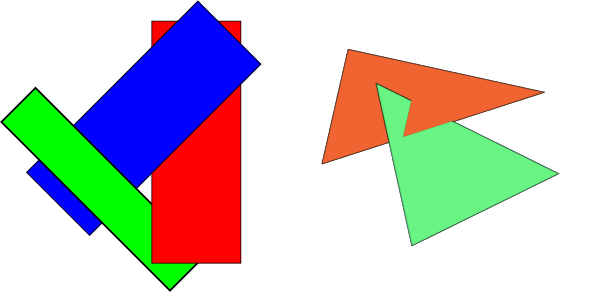

Before continuing, it is critically important that you understand that the layering of what is seen on-screen is only (for now) defined by the order in which we draw things: whatever is drawn first will be drawn over by any later render commands that touch the same pixels. Thus, if we draw the back of an object first, then the front part of the object, it will appear solid, because the front-most layer covers the back-most layer. Otherwise, the output would look severely distorted, just like what you saw in the previous example.
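
The effect of draw order is easy to reproduce without any 3D math at all. The sketch below uses a hypothetical one-row "canvas" made of text characters (the helper "DrawSpans" and the two sample spans are assumptions for illustration). Each cell simply remembers the last thing drawn into it, just like Canvas does with pixels.

```javascript
// Hypothetical one-row "canvas": each cell remembers the last thing drawn into it
function DrawSpans(spans, width)
{
    var row = [];
    for(var x = 0; x < width; x++)
        row[x] = ".";

    // Draw the spans in the order given; later spans overwrite earlier ones
    for(var s = 0; s < spans.length; s++)
    {
        for(var x = spans[s].from; x <= spans[s].to; x++)
            row[x] = spans[s].label;
    }
    return row.join("");
}

var BackSpan = {label: "B", from: 1, to: 6};   // far away, wide
var FrontSpan = {label: "F", from: 3, to: 4};  // close by, narrow

console.log(DrawSpans([BackSpan, FrontSpan], 8)); // ".BBFFBB." back first: correct
console.log(DrawSpans([FrontSpan, BackSpan], 8)); // ".BBBBBB." front first: front is lost
```

Nothing about the two spans changed between the two calls; only the order did. That is the whole problem we need to solve for our triangles.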

The painter's algorithm is fast and efficient, only limited by how fast you can sort a list, but it is not very accurate with intersecting faces. The algorithm steps through each triangle in the scene and calculates its distance from the camera. This calculation can be done in several different ways, but it is generally better to average the distances from the camera to the three points of the surface triangle. Once you have a list of these distances, you must sort the triangles from furthest to closest, and render them in this sorted order. Why? Just like when painting a picture, you must paint the furthest objects first so that new and closer objects are drawn on top of objects in the background.

This simple overlay of polygons causes the painter's algorithm to fail, since no polygon can be drawn first, but in the general case this approach is fast and simple enough to understand for our current lesson.

A classic problem in computer science is sorting data: in our case, we only need to sort based on distance, which is a single floating-point value. Wikipedia, as always, has a great article on the problem of sorting algorithms which is well worth a read, but in our case, to keep code as simple as possible, we will be using "bubble-sort". We choose not to use the built-in sorting functions of JavaScript to make all steps in this lesson as clear as possible, since sorting is done on two parallel arrays.
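
Put together, the steps described above might look like the following minimal sketch. It rests on a few assumptions: the vertices are taken to be already rotated and translated so that the camera sits at the origin, and the helper names "AverageDistance" and "SortFacesForPainter", as well as the "name" field on the sample faces, are made up for illustration.

```javascript
// Hypothetical helper: average distance from the camera (at the origin) to a triangle's corners
function AverageDistance(face, vertices)
{
    var corners = [vertices[face.a], vertices[face.b], vertices[face.c]];
    var total = 0;
    for(var k = 0; k < 3; k++)
    {
        total += Math.sqrt(corners[k].x * corners[k].x + corners[k].y * corners[k].y + corners[k].z * corners[k].z);
    }
    return total / 3;
}

// Returns a copy of "faces" ordered furthest first, using bubble sort on two parallel arrays
function SortFacesForPainter(faces, vertices)
{
    var order = faces.slice();
    var distances = [];
    for(var i = 0; i < order.length; i++)
        distances[i] = AverageDistance(order[i], vertices);

    for(var pass = 0; pass < order.length - 1; pass++)
    {
        for(var j = 0; j < order.length - 1 - pass; j++)
        {
            // A nearer face sits before a further one: swap the entries in BOTH arrays
            if(distances[j] < distances[j + 1])
            {
                var tempDistance = distances[j];
                distances[j] = distances[j + 1];
                distances[j + 1] = tempDistance;

                var tempFace = order[j];
                order[j] = order[j + 1];
                order[j + 1] = tempFace;
            }
        }
    }
    return order;
}

// Two triangles in camera space: "near" at z = 2 and "far" at z = 5
var SortVertices = [
    {x:0, y:0, z:2}, {x:1, y:0, z:2}, {x:0, y:1, z:2},
    {x:0, y:0, z:5}, {x:1, y:0, z:5}, {x:0, y:1, z:5}
];
var SortFaces = [{a:0, b:1, c:2, name:"near"}, {a:3, b:4, c:5, name:"far"}];
var Sorted = SortFacesForPainter(SortFaces, SortVertices);
console.log(Sorted[0].name + ", " + Sorted[1].name); // "far, near"
```

The important detail is that every swap is applied to both arrays, so a distance always stays paired with its face. The original face list is left untouched, which matters because the sort has to be redone every frame as the object rotates.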

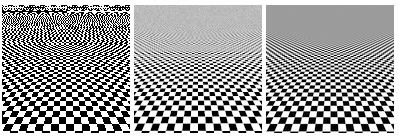

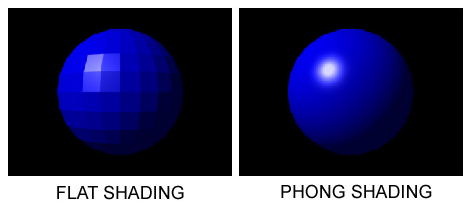

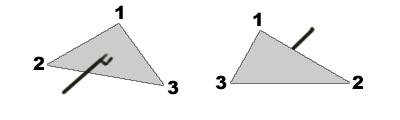

Note